diff --git a/FaceLandmarkDetection/Dockerfile b/FaceLandmarkDetection/Dockerfile

new file mode 100644

index 0000000000000000000000000000000000000000..39c5d4a5841ee70227428c47c8c02de4a588e150

--- /dev/null

+++ b/FaceLandmarkDetection/Dockerfile

@@ -0,0 +1,33 @@

+# Based on https://github.com/pytorch/pytorch/blob/master/Dockerfile

+FROM nvidia/cuda:9.2-cudnn7-devel-ubuntu16.04

+

+RUN apt-get update && apt-get install -y --no-install-recommends \

+ build-essential \

+ cmake \

+ git \

+ curl \

+ vim \

+ ca-certificates \

+ libboost-all-dev \

+ python-qt4 \

+ libjpeg-dev \

+ libpng-dev &&\

+ rm -rf /var/lib/apt/lists/*

+

+RUN curl -L -o ~/miniconda.sh https://repo.continuum.io/miniconda/Miniconda3-latest-Linux-x86_64.sh && \

+ chmod +x ~/miniconda.sh && \

+ ~/miniconda.sh -b -p /opt/conda && \

+ rm ~/miniconda.sh

+

+ENV PATH /opt/conda/bin:$PATH

+

+RUN conda config --set always_yes yes --set changeps1 no && conda update -q conda

+RUN conda install pytorch torchvision cuda92 -c pytorch

+

+# Install face-alignment package

+WORKDIR /workspace

+RUN chmod -R a+w /workspace

+RUN git clone https://github.com/1adrianb/face-alignment

+WORKDIR /workspace/face-alignment

+RUN pip install -r requirements.txt

+RUN python setup.py install

diff --git a/FaceLandmarkDetection/LICENSE b/FaceLandmarkDetection/LICENSE

new file mode 100644

index 0000000000000000000000000000000000000000..ed4c6d1af07162de4ff7d0192fdbbf42acd77beb

--- /dev/null

+++ b/FaceLandmarkDetection/LICENSE

@@ -0,0 +1,29 @@

+BSD 3-Clause License

+

+Copyright (c) 2017, Adrian Bulat

+All rights reserved.

+

+Redistribution and use in source and binary forms, with or without

+modification, are permitted provided that the following conditions are met:

+

+* Redistributions of source code must retain the above copyright notice, this

+ list of conditions and the following disclaimer.

+

+* Redistributions in binary form must reproduce the above copyright notice,

+ this list of conditions and the following disclaimer in the documentation

+ and/or other materials provided with the distribution.

+

+* Neither the name of the copyright holder nor the names of its

+ contributors may be used to endorse or promote products derived from

+ this software without specific prior written permission.

+

+THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

+AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

+IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE

+DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE

+FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL

+DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR

+SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER

+CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY,

+OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

+OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

diff --git a/FaceLandmarkDetection/README.md b/FaceLandmarkDetection/README.md

new file mode 100644

index 0000000000000000000000000000000000000000..211370c6bc8954d4285ae5575b1a6401e19b9874

--- /dev/null

+++ b/FaceLandmarkDetection/README.md

@@ -0,0 +1,183 @@

+# Face Recognition

+

+Detect facial landmarks from Python using the world's most accurate face alignment network, capable of detecting points in both 2D and 3D coordinates.

+

+Built using [FAN](https://www.adrianbulat.com)'s state-of-the-art deep-learning-based face alignment method.

+

+

+

+**Note:** The lua version is available [here](https://github.com/1adrianb/2D-and-3D-face-alignment).

+

+For numerical evaluations, it is highly recommended to use the Lua version, which uses models identical to the ones evaluated in the paper. More models will be added soon.

+

+[License: BSD-3-Clause](https://opensource.org/licenses/BSD-3-Clause) [Build status](https://travis-ci.com/1adrianb/face-alignment) [Conda package](https://anaconda.org/1adrianb/face_alignment)

+[PyPI package](https://pypi.org/project/face-alignment/)

+

+## Features

+

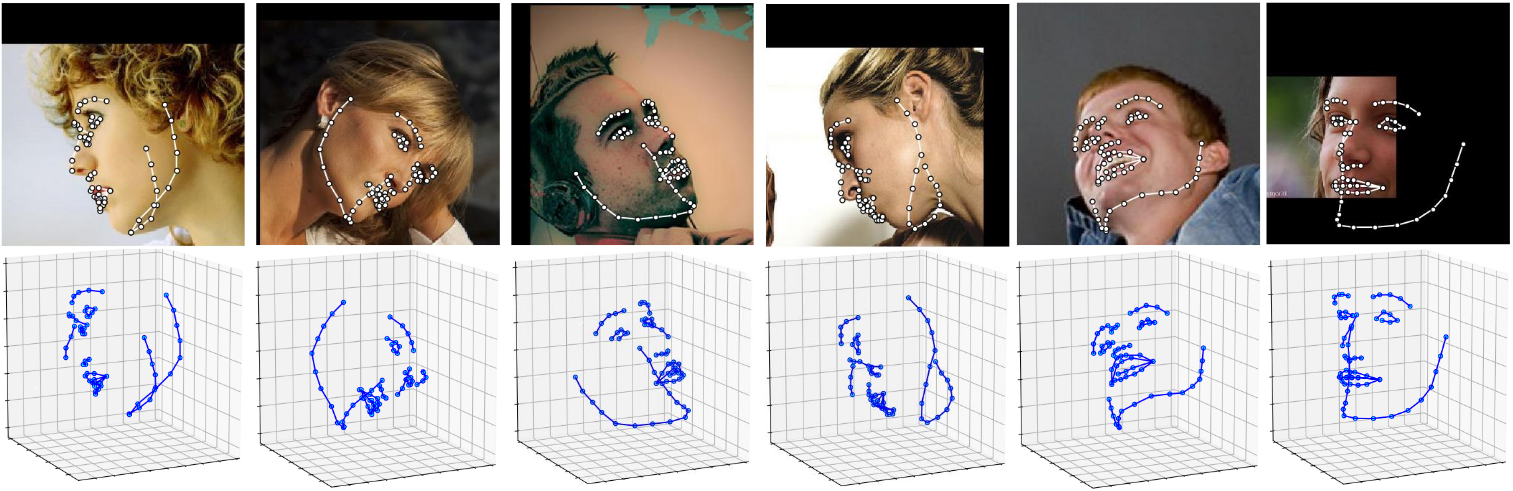

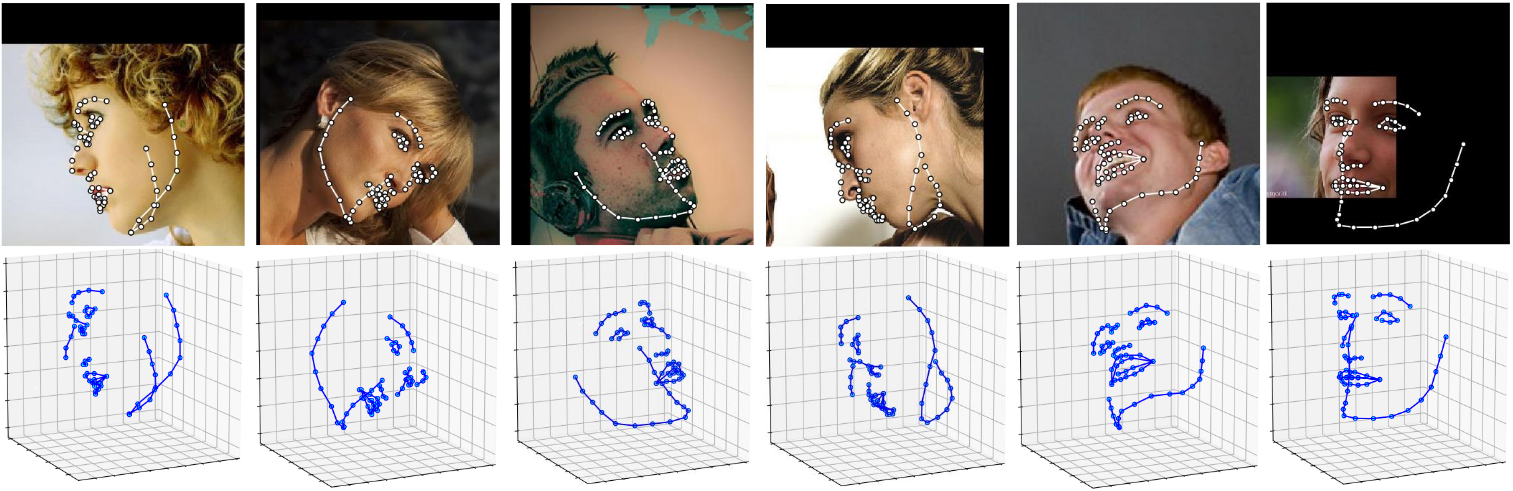

+#### Detect 2D facial landmarks in pictures

+

+

+

+

+

+```python

+import face_alignment

+from skimage import io

+

+fa = face_alignment.FaceAlignment(face_alignment.LandmarksType._2D, flip_input=False)

+

+image = io.imread('../test/assets/aflw-test.jpg')

+preds = fa.get_landmarks_from_image(image)

+```
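In this diff, `get_landmarks_from_image` (which the deprecated `get_landmarks` wraps) returns a list holding one `(68, 2)` NumPy array per detected face, or `None` when no face is found. A minimal sketch for slicing one such array into facial regions follows; it assumes the network uses the common 68-point iBUG 300-W markup, and the `FACIAL_REGIONS` ranges and `split_regions` helper are illustrative, not part of the package.

```python
import numpy as np

# Index ranges of the 68-point iBUG 300-W markup (assumed to match FAN's output order).
# Left/right follow the subject's perspective.
FACIAL_REGIONS = {
    "jaw": range(0, 17),
    "right_eyebrow": range(17, 22),
    "left_eyebrow": range(22, 27),
    "nose": range(27, 36),
    "right_eye": range(36, 42),
    "left_eye": range(42, 48),
    "mouth": range(48, 68),
}


def split_regions(landmarks):
    """Split a (68, 2) or (68, 3) landmark array into named facial regions."""
    landmarks = np.asarray(landmarks)
    if landmarks.shape[0] != 68:
        raise ValueError("expected 68 landmarks, got %d" % landmarks.shape[0])
    return {name: landmarks[list(idx)] for name, idx in FACIAL_REGIONS.items()}


if __name__ == "__main__":
    # Stand-in for one entry of `preds`; real values come from the FaceAlignment object.
    fake_face = np.arange(68 * 2, dtype=np.float32).reshape(68, 2)
    print({name: pts.shape for name, pts in split_regions(fake_face).items()})
```

Because the helper only indexes the first axis, it works unchanged on the `(68, 3)` arrays produced in 3D mode.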

+

+#### Detect 3D facial landmarks in pictures

+

+

+

+

+

+```python

+import face_alignment

+from skimage import io

+

+fa = face_alignment.FaceAlignment(face_alignment.LandmarksType._3D, flip_input=False)

+

+image = io.imread('../test/assets/aflw-test.jpg')

+preds = fa.get_landmarks_from_image(image)

+```
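With `LandmarksType._3D`, each entry of `preds` is a `(68, 3)` array: two image-plane coordinates plus a depth value that `api.py` rescales from network units by `200 * scale / 256`, where `scale` is the box width plus height divided by the detector's `reference_scale`. The NumPy sketch below mirrors that rescaling and splits a prediction into its 2D and depth parts; `depth_to_image_units` and `split_xy_z` are hypothetical helpers, and the default `reference_scale=195` is the value used by the dlib and folder detectors in this diff (the SFD value is not shown here).

```python
import numpy as np


def depth_to_image_units(depth_net, box, reference_scale=195.0):
    """Rescale raw depth-network outputs to image units, mirroring api.py.

    box is (x1, y1, x2, y2); reference_scale=195 is assumed (dlib/folder detectors).
    """
    x1, y1, x2, y2 = [float(v) for v in box[:4]]
    scale = ((x2 - x1) + (y2 - y1)) / reference_scale
    # api.py multiplies by 1 / (256 / (200 * scale)), i.e. by 200 * scale / 256.
    return np.asarray(depth_net, dtype=np.float64) * (200.0 * scale / 256.0)


def split_xy_z(pred_3d):
    """Split one (68, 3) prediction into (68, 2) image coordinates and (68,) depth."""
    pred_3d = np.asarray(pred_3d)
    return pred_3d[:, :2], pred_3d[:, 2]


if __name__ == "__main__":
    xy, z = split_xy_z(np.zeros((68, 3)))
    print(xy.shape, z.shape)
```

The depth is only meaningful relative to the other points of the same face, so comparisons across faces are best made after normalising by the face size.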

+

+#### Process an entire directory in one go

+

+```python

+import face_alignment


+

+fa = face_alignment.FaceAlignment(face_alignment.LandmarksType._2D, flip_input=False)

+

+preds = fa.get_landmarks_from_directory('../test/assets/')

+```
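`get_landmarks_from_directory` returns a dictionary keyed by image path; each value is whatever `get_landmarks_from_image` returned for that image, which is `None` when no face was detected. A small sketch for summarising such a dictionary and skipping images without detections; `summarize_predictions` and `faces_only` are hypothetical helpers, and the input in the demo is dummy data, not real output.

```python
def summarize_predictions(predictions):
    """Map each image path to the number of faces with landmarks (0 when None)."""
    return {path: (0 if faces is None else len(faces))
            for path, faces in predictions.items()}


def faces_only(predictions):
    """Keep only the images for which at least one face was detected."""
    return {path: faces for path, faces in predictions.items() if faces}


if __name__ == "__main__":
    # Dummy stand-in for fa.get_landmarks_from_directory(...) output.
    dummy = {"img/a.jpg": [[(0.0, 0.0)] * 68], "img/b.jpg": None}
    print(summarize_predictions(dummy))  # {'img/a.jpg': 1, 'img/b.jpg': 0}
```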

+

+#### Detect the landmarks using a specific face detector

+

+By default, the package uses the SFD face detector. Alternatively, users can use dlib or pre-existing ground-truth bounding boxes.

+

+```python

+import face_alignment

+

+# sfd for SFD, dlib for Dlib and folder for existing bounding boxes.

+fa = face_alignment.FaceAlignment(face_alignment.LandmarksType._2D, face_detector='sfd')

+```
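With `face_detector='folder'`, the `FolderDetector` in this diff looks next to each image for a file with the same base name and one of the extensions `.npy`, `.t7` or `.pth` (checked in that order), expecting a list of `(x1, y1, x2, y2)` boxes. Because its `detect_from_image` accepts only path strings, it appears to be usable through `get_landmarks_from_directory` rather than on in-memory images. A sketch of the lookup and of writing boxes as `.npy` follows; `box_file_for` and `save_boxes` are hypothetical helpers. Since `np.load` returns an array rather than a list, and this version type-checks the loaded boxes against `list`, confirm that your copy of the detector accepts (or converts) arrays.

```python
import os

import numpy as np

# Extensions the folder detector checks, in order, next to each image.
BOX_EXTENSIONS = (".npy", ".t7", ".pth")


def box_file_for(image_path):
    """Return the first existing box file for image_path, or None."""
    base_name = os.path.splitext(image_path)[0]
    for ext in BOX_EXTENSIONS:
        candidate = base_name + ext
        if os.path.isfile(candidate):
            return candidate
    return None


def save_boxes(image_path, boxes):
    """Write boxes [(x1, y1, x2, y2), ...] to <base_name>.npy next to the image."""
    out_path = os.path.splitext(image_path)[0] + ".npy"
    np.save(out_path, np.asarray(boxes, dtype=np.float32))
    return out_path
```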

+

+#### Running on CPU/GPU

+To specify the device (GPU or CPU) on which the code runs, pass the `device` argument explicitly (it defaults to `'cuda'`):

+

+```python

+import face_alignment

+

+# 'cpu' for CPU, 'cuda' for CUDA

+fa = face_alignment.FaceAlignment(face_alignment.LandmarksType._2D, device='cpu')

+```
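`FaceAlignment` defaults to `device='cuda'`, and the detector base class in this diff raises `ValueError` unless the device string contains `cpu` or `cuda`. A small sketch that falls back to the CPU when CUDA is not available and applies the same check up front; `resolve_device` is a hypothetical helper, and it treats a missing PyTorch install as CPU-only.

```python
def resolve_device(requested=None):
    """Pick 'cuda' when available (else 'cpu') and validate the device string."""
    if requested is None:
        try:
            import torch
            requested = "cuda" if torch.cuda.is_available() else "cpu"
        except ImportError:
            # No PyTorch install: fall back to CPU so the check below still runs.
            requested = "cpu"
    # Same rule as FaceDetector.__init__: the string must mention cpu or cuda.
    if "cpu" not in requested and "cuda" not in requested:
        raise ValueError("Expected values for device are: {cpu, cuda} but got: %s" % requested)
    return requested
```

Usage, assuming the helper is in scope: `fa = face_alignment.FaceAlignment(face_alignment.LandmarksType._2D, device=resolve_device())`.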

+

+Please also see the `examples` folder.

+

+## Installation

+

+### Requirements

+

+* Python 3.5+ or Python 2.7 (it may work with other versions too)

+* Linux, Windows or macOS

+* pytorch (>=1.0)

+

+While not required, for optimal performance (especially for the detector) it is **highly** recommended to run the code on a CUDA-enabled GPU.

+

+### Binaries

+

+The easiest way to install it is using either pip or conda:

+

+| **Using pip** | **Using conda** |

+|------------------------------|--------------------------------------------|

+| `pip install face-alignment` | `conda install -c 1adrianb face_alignment` |


+

+Alternatively, instructions for building it from source are given below.

+

+### From source

+

+Install PyTorch and its dependencies. The instructions below are taken from the [PyTorch README](https://github.com/pytorch/pytorch); for an up-to-date version, check the framework's GitHub page.

+

+On Linux

+```bash

+export CMAKE_PREFIX_PATH="$(dirname $(which conda))/../" # [anaconda root directory]

+

+# Install basic dependencies

+conda install numpy pyyaml mkl setuptools cmake gcc cffi

+

+# Add LAPACK support for the GPU

+conda install -c soumith magma-cuda80 # or magma-cuda75 if CUDA 7.5

+```

+

+On OSX

+```bash

+export CMAKE_PREFIX_PATH=[anaconda root directory]

+conda install numpy pyyaml setuptools cmake cffi

+```

+#### Get the PyTorch source

+```bash

+git clone --recursive https://github.com/pytorch/pytorch

+```

+

+#### Install PyTorch

+On Linux

+```bash

+python setup.py install

+```

+

+On OSX

+```bash

+MACOSX_DEPLOYMENT_TARGET=10.9 CC=clang CXX=clang++ python setup.py install

+```

+

+#### Get the Face Alignment source code

+```bash

+git clone https://github.com/1adrianb/face-alignment

+```

+#### Install the Face Alignment lib

+```bash

+cd face-alignment

+pip install -r requirements.txt

+python setup.py install

+```

+

+### Docker image

+

+A Dockerfile is provided to build images with CUDA 9.2 and cuDNN 7 support. For more instructions on building and running a Docker image, check the official Docker documentation.

+```bash

+docker build -t face-alignment .

+```

+

+## How does it work?

+

+While the work is presented here as a black box, if you want to know more about the inner workings of the method, please check the original paper, either on arXiv or on my [webpage](https://www.adrianbulat.com).
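One concrete pre-processing step is visible in `api.py`: for each bounding box, `get_landmarks_from_image` derives a crop centre and scale before running FAN. The centre is the box midpoint shifted up by 12% of the box height, and the scale is the box width plus height divided by the detector's `reference_scale` (195 for the dlib and folder detectors in this diff). A NumPy sketch of that step; `box_to_center_scale` is a hypothetical name, and the `crop` function that consumes these values lives in `utils.py`, which is not shown here.

```python
import numpy as np


def box_to_center_scale(box, reference_scale=195.0):
    """Mirror the centre/scale computation in FaceAlignment.get_landmarks_from_image.

    box is (x1, y1, x2, y2[, score]) in pixels; any extra entries are ignored.
    """
    x1, y1, x2, y2 = [float(v) for v in box[:4]]
    width, height = x2 - x1, y2 - y1
    center = np.array([x2 - width / 2.0, y2 - height / 2.0])
    # Shift the centre upwards by 12% of the box height.
    center[1] -= height * 0.12
    scale = (width + height) / reference_scale
    return center, scale
```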

+

+## Contributions

+

+All contributions are welcome. If you encounter any problem (including images on which the method fails), feel free to open an issue.

+

+## Citation

+

+```

+@inproceedings{bulat2017far,

+ title={How far are we from solving the 2D \& 3D Face Alignment problem? (and a dataset of 230,000 3D facial landmarks)},

+ author={Bulat, Adrian and Tzimiropoulos, Georgios},

+ booktitle={International Conference on Computer Vision},

+ year={2017}

+}

+```

+

+To cite dlib, PyTorch or any other package used here, please check the original pages of their respective authors.

+

+## Acknowledgements

+

+* To the [pytorch](http://pytorch.org/) team for providing such an awesome deep learning framework

+* To [my supervisor](http://www.cs.nott.ac.uk/~pszyt/) for his patience and suggestions.

+* To all other python developers that made available the rest of the packages used in this repository.

diff --git a/FaceLandmarkDetection/docs/images/2dlandmarks.png b/FaceLandmarkDetection/docs/images/2dlandmarks.png

new file mode 100644

index 0000000000000000000000000000000000000000..f815722b4b22066e21fda9210400e86d36019f8a

Binary files /dev/null and b/FaceLandmarkDetection/docs/images/2dlandmarks.png differ

diff --git a/FaceLandmarkDetection/docs/images/face-alignment-adrian.gif b/FaceLandmarkDetection/docs/images/face-alignment-adrian.gif

new file mode 100644

index 0000000000000000000000000000000000000000..b6bb4224b922dfa04b7fa61805af65e56a9a08be

Binary files /dev/null and b/FaceLandmarkDetection/docs/images/face-alignment-adrian.gif differ

diff --git a/FaceLandmarkDetection/face_alignment/__init__.py b/FaceLandmarkDetection/face_alignment/__init__.py

new file mode 100644

index 0000000000000000000000000000000000000000..4bae29fd5f85b41e4669302bd2603bc6924eddc7

--- /dev/null

+++ b/FaceLandmarkDetection/face_alignment/__init__.py

@@ -0,0 +1,7 @@

+# -*- coding: utf-8 -*-

+

+__author__ = """Adrian Bulat"""

+__email__ = 'adrian.bulat@nottingham.ac.uk'

+__version__ = '1.0.1'

+

+from .api import FaceAlignment, LandmarksType, NetworkSize

diff --git a/FaceLandmarkDetection/face_alignment/__pycache__/__init__.cpython-36.pyc b/FaceLandmarkDetection/face_alignment/__pycache__/__init__.cpython-36.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..26bc9e86be76e79e7a32a0c66f262f2581e87fb5

Binary files /dev/null and b/FaceLandmarkDetection/face_alignment/__pycache__/__init__.cpython-36.pyc differ

diff --git a/FaceLandmarkDetection/face_alignment/__pycache__/__init__.cpython-37.pyc b/FaceLandmarkDetection/face_alignment/__pycache__/__init__.cpython-37.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..079f72e8c64729757200ce1dcbb9f62e36db71de

Binary files /dev/null and b/FaceLandmarkDetection/face_alignment/__pycache__/__init__.cpython-37.pyc differ

diff --git a/FaceLandmarkDetection/face_alignment/__pycache__/api.cpython-36.pyc b/FaceLandmarkDetection/face_alignment/__pycache__/api.cpython-36.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..c8b0efd010573c3ec4fd536afe90d65547b0cbdc

Binary files /dev/null and b/FaceLandmarkDetection/face_alignment/__pycache__/api.cpython-36.pyc differ

diff --git a/FaceLandmarkDetection/face_alignment/__pycache__/api.cpython-37.pyc b/FaceLandmarkDetection/face_alignment/__pycache__/api.cpython-37.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..cf659733951be5a40c9a60d2fe54a8eb18bfb97f

Binary files /dev/null and b/FaceLandmarkDetection/face_alignment/__pycache__/api.cpython-37.pyc differ

diff --git a/FaceLandmarkDetection/face_alignment/__pycache__/models.cpython-36.pyc b/FaceLandmarkDetection/face_alignment/__pycache__/models.cpython-36.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..e3b480edcd636d96fe427e73d5d1006bd1e91b36

Binary files /dev/null and b/FaceLandmarkDetection/face_alignment/__pycache__/models.cpython-36.pyc differ

diff --git a/FaceLandmarkDetection/face_alignment/__pycache__/models.cpython-37.pyc b/FaceLandmarkDetection/face_alignment/__pycache__/models.cpython-37.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..e3407599d10993ad2af893304eb58cc4e94456be

Binary files /dev/null and b/FaceLandmarkDetection/face_alignment/__pycache__/models.cpython-37.pyc differ

diff --git a/FaceLandmarkDetection/face_alignment/__pycache__/utils.cpython-36.pyc b/FaceLandmarkDetection/face_alignment/__pycache__/utils.cpython-36.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..5b3f0648a7b85eea4c2d8eedcd5434a40238744b

Binary files /dev/null and b/FaceLandmarkDetection/face_alignment/__pycache__/utils.cpython-36.pyc differ

diff --git a/FaceLandmarkDetection/face_alignment/__pycache__/utils.cpython-37.pyc b/FaceLandmarkDetection/face_alignment/__pycache__/utils.cpython-37.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..b85e82aaa020db2e651ee1f7ea67e71195a6f1c3

Binary files /dev/null and b/FaceLandmarkDetection/face_alignment/__pycache__/utils.cpython-37.pyc differ

diff --git a/FaceLandmarkDetection/face_alignment/api.py b/FaceLandmarkDetection/face_alignment/api.py

new file mode 100644

index 0000000000000000000000000000000000000000..a8a09a3095d08ef20c46550662766000d13d507c

--- /dev/null

+++ b/FaceLandmarkDetection/face_alignment/api.py

@@ -0,0 +1,207 @@

+from __future__ import print_function

+import os

+import torch

+from torch.utils.model_zoo import load_url

+from enum import Enum

+from skimage import io

+from skimage import color

+import numpy as np

+import cv2

+try:

+ import urllib.request as request_file

+except BaseException:

+ import urllib as request_file

+

+from .models import FAN, ResNetDepth

+from .utils import *

+

+

+class LandmarksType(Enum):

+ """Enum class defining the type of landmarks to detect.

+

+ ``_2D`` - the detected points ``(x,y)`` are detected in a 2D space and follow the visible contour of the face

+ ``_2halfD`` - these points represent the projection of the 3D points into 2D

+ ``_3D`` - detect the points ``(x,y,z)`` in a 3D space

+

+ """

+ _2D = 1

+ _2halfD = 2

+ _3D = 3

+

+

+class NetworkSize(Enum):

+ # TINY = 1

+ # SMALL = 2

+ # MEDIUM = 3

+ LARGE = 4

+

+ def __new__(cls, value):

+ member = object.__new__(cls)

+ member._value_ = value

+ return member

+

+ def __int__(self):

+ return self.value

+

+models_urls = {

+ '2DFAN-4': 'https://www.adrianbulat.com/downloads/python-fan/2DFAN4-11f355bf06.pth.tar',

+ '3DFAN-4': 'https://www.adrianbulat.com/downloads/python-fan/3DFAN4-7835d9f11d.pth.tar',

+ 'depth': 'https://www.adrianbulat.com/downloads/python-fan/depth-2a464da4ea.pth.tar',

+}

+

+

+class FaceAlignment:

+ def __init__(self, landmarks_type, network_size=NetworkSize.LARGE,

+ device='cuda', flip_input=False, face_detector='sfd', verbose=False):

+ self.device = device

+ self.flip_input = flip_input

+ self.landmarks_type = landmarks_type

+ self.verbose = verbose

+

+ network_size = int(network_size)

+

+ if 'cuda' in device:

+ torch.backends.cudnn.benchmark = True

+

+ # Get the face detector

+ face_detector_module = __import__('face_alignment.detection.' + face_detector,

+ globals(), locals(), [face_detector], 0)

+ self.face_detector = face_detector_module.FaceDetector(device=device, verbose=verbose)

+

+ # Initialise the face alignment networks

+ self.face_alignment_net = FAN(network_size)

+ if landmarks_type == LandmarksType._2D:

+ network_name = '2DFAN-' + str(network_size)

+ else:

+ network_name = '3DFAN-' + str(network_size)

+

+ fan_weights = load_url(models_urls[network_name], map_location=lambda storage, loc: storage)

+ self.face_alignment_net.load_state_dict(fan_weights)

+

+ self.face_alignment_net.to(device)

+ self.face_alignment_net.eval()

+

+ # Initialise the depth prediction network

+ if landmarks_type == LandmarksType._3D:

+ self.depth_prediciton_net = ResNetDepth()

+

+ depth_weights = load_url(models_urls['depth'], map_location=lambda storage, loc: storage)

+ depth_dict = {

+ k.replace('module.', ''): v for k,

+ v in depth_weights['state_dict'].items()}

+ self.depth_prediciton_net.load_state_dict(depth_dict)

+

+ self.depth_prediciton_net.to(device)

+ self.depth_prediciton_net.eval()

+

+ def get_landmarks(self, image_or_path, detected_faces=None):

+ """Deprecated, please use get_landmarks_from_image

+

+ Arguments:

+ image_or_path {string or numpy.array or torch.tensor} -- The input image or path to it.

+

+ Keyword Arguments:

+ detected_faces {list of numpy.array} -- list of bounding boxes, one for each face found

+ in the image (default: {None})

+ """

+ return self.get_landmarks_from_image(image_or_path, detected_faces)

+

+ def get_landmarks_from_image(self, image_or_path, detected_faces=None):

+ """Predict the landmarks for each face present in the image.

+

+ This function predicts a set of 68 2D or 3D landmarks for each face present in the image.

+ If detected_faces is None, the method will also run a face detector.

+

+ Arguments:

+ image_or_path {string or numpy.array or torch.tensor} -- The input image or path to it.

+

+ Keyword Arguments:

+ detected_faces {list of numpy.array} -- list of bounding boxes, one for each face found

+ in the image (default: {None})

+ """

+ if isinstance(image_or_path, str):

+ try:

+ image = io.imread(image_or_path)

+ except IOError:

+ print("error opening file :: ", image_or_path)

+ return None

+ else:

+ image = image_or_path

+

+ if image.ndim == 2:

+ image = color.gray2rgb(image)

+ elif image.ndim == 3 and image.shape[2] == 4:

+ image = image[..., :3]

+

+ if detected_faces is None:

+ detected_faces = self.face_detector.detect_from_image(image[..., ::-1].copy())

+

+ if len(detected_faces) == 0:

+ print("Warning: No faces were detected.")

+ return None

+

+ torch.set_grad_enabled(False)

+ landmarks = []

+ for i, d in enumerate(detected_faces):

+ center = torch.FloatTensor(

+ [d[2] - (d[2] - d[0]) / 2.0, d[3] - (d[3] - d[1]) / 2.0])

+ center[1] = center[1] - (d[3] - d[1]) * 0.12

+ scale = (d[2] - d[0] + d[3] - d[1]) / self.face_detector.reference_scale

+

+ inp = crop(image, center, scale)

+ inp = torch.from_numpy(inp.transpose(

+ (2, 0, 1))).float()

+

+ inp = inp.to(self.device)

+ inp.div_(255.0).unsqueeze_(0)

+

+ out = self.face_alignment_net(inp)[-1].detach()

+ if self.flip_input:

+ out += flip(self.face_alignment_net(flip(inp))

+ [-1].detach(), is_label=True)

+ out = out.cpu()

+

+ pts, pts_img = get_preds_fromhm(out, center, scale)

+ pts, pts_img = pts.view(68, 2) * 4, pts_img.view(68, 2)

+

+ if self.landmarks_type == LandmarksType._3D:

+ heatmaps = np.zeros((68, 256, 256), dtype=np.float32)

+ for i in range(68):

+ if pts[i, 0] > 0:

+ heatmaps[i] = draw_gaussian(

+ heatmaps[i], pts[i], 2)

+ heatmaps = torch.from_numpy(

+ heatmaps).unsqueeze_(0)

+

+ heatmaps = heatmaps.to(self.device)

+ depth_pred = self.depth_prediciton_net(

+ torch.cat((inp, heatmaps), 1)).data.cpu().view(68, 1)

+ pts_img = torch.cat(

+ (pts_img, depth_pred * (1.0 / (256.0 / (200.0 * scale)))), 1)

+

+ landmarks.append(pts_img.numpy())

+

+ return landmarks

+

+ def get_landmarks_from_directory(self, path, extensions=['.jpg', '.png'], recursive=True, show_progress_bar=True):

+ detected_faces = self.face_detector.detect_from_directory(path, extensions, recursive, show_progress_bar)

+

+ predictions = {}

+ for image_path, bounding_boxes in detected_faces.items():

+ image = io.imread(image_path)

+ preds = self.get_landmarks_from_image(image, bounding_boxes)

+ predictions[image_path] = preds

+

+ return predictions

+

+ @staticmethod

+ def remove_models():

+ base_path = os.path.join(appdata_dir('face_alignment'), "data")

+ for data_model in os.listdir(base_path):

+ file_path = os.path.join(base_path, data_model)

+ try:

+ if os.path.isfile(file_path):

+ print('Removing ' + data_model + ' ...')

+ os.unlink(file_path)

+ except Exception as e:

+ print(e)

diff --git a/FaceLandmarkDetection/face_alignment/detection/__init__.py b/FaceLandmarkDetection/face_alignment/detection/__init__.py

new file mode 100644

index 0000000000000000000000000000000000000000..1a6b0402dae864a3cc5dc2a90a412fd842a0efc7

--- /dev/null

+++ b/FaceLandmarkDetection/face_alignment/detection/__init__.py

@@ -0,0 +1 @@

+from .core import FaceDetector

\ No newline at end of file

diff --git a/FaceLandmarkDetection/face_alignment/detection/__pycache__/__init__.cpython-36.pyc b/FaceLandmarkDetection/face_alignment/detection/__pycache__/__init__.cpython-36.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..09ea4818d422fd3267003f907aad6a7b79fd829e

Binary files /dev/null and b/FaceLandmarkDetection/face_alignment/detection/__pycache__/__init__.cpython-36.pyc differ

diff --git a/FaceLandmarkDetection/face_alignment/detection/__pycache__/__init__.cpython-37.pyc b/FaceLandmarkDetection/face_alignment/detection/__pycache__/__init__.cpython-37.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..605c422babf01756a23b39a4b869b24896a17586

Binary files /dev/null and b/FaceLandmarkDetection/face_alignment/detection/__pycache__/__init__.cpython-37.pyc differ

diff --git a/FaceLandmarkDetection/face_alignment/detection/__pycache__/core.cpython-36.pyc b/FaceLandmarkDetection/face_alignment/detection/__pycache__/core.cpython-36.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..93d2a9d3998cf9c2facbbf5b6731d067c7193967

Binary files /dev/null and b/FaceLandmarkDetection/face_alignment/detection/__pycache__/core.cpython-36.pyc differ

diff --git a/FaceLandmarkDetection/face_alignment/detection/__pycache__/core.cpython-37.pyc b/FaceLandmarkDetection/face_alignment/detection/__pycache__/core.cpython-37.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..95657db16b78133d1e8f86160cb96fe371b5ba16

Binary files /dev/null and b/FaceLandmarkDetection/face_alignment/detection/__pycache__/core.cpython-37.pyc differ

diff --git a/FaceLandmarkDetection/face_alignment/detection/core.py b/FaceLandmarkDetection/face_alignment/detection/core.py

new file mode 100644

index 0000000000000000000000000000000000000000..47a3f9d5fcf461843b2aafb75031f06be591c7dd

--- /dev/null

+++ b/FaceLandmarkDetection/face_alignment/detection/core.py

@@ -0,0 +1,131 @@

+import logging

+import glob

+from tqdm import tqdm

+import numpy as np

+import torch

+import cv2

+from skimage import io

+

+

+class FaceDetector(object):

+ """An abstract class representing a face detector.

+

+ Any other face detection implementation must subclass it. All subclasses

+ must implement ``detect_from_image``, which returns a list of detected

+ bounding boxes. Optionally, for speed considerations, detecting directly

+ from a path is recommended.

+ """

+

+ def __init__(self, device, verbose):

+ self.device = device

+ self.verbose = verbose

+

+ logger = logging.getLogger(__name__)

+ if verbose and 'cpu' in device:

+ logger.warning("Detection running on CPU, this may be slow.")

+

+ if 'cpu' not in device and 'cuda' not in device:

+ if verbose:

+ logger.error("Expected values for device are: {cpu, cuda} but got: %s", device)

+ raise ValueError

+

+ def detect_from_image(self, tensor_or_path):

+ """Detects faces in a given image.

+

+ This function detects the faces present in a provided BGR (usually)

+ image. The input can be either the image itself or the path to it.

+

+ Arguments:

+ tensor_or_path {numpy.ndarray, torch.tensor or string} -- the path

+ to an image or the image itself.

+

+ Example::

+

+ >>> path_to_image = 'data/image_01.jpg'

+ ... detected_faces = detect_from_image(path_to_image)

+ [A list of bounding boxes (x1, y1, x2, y2)]

+ >>> image = cv2.imread(path_to_image)

+ ... detected_faces = detect_from_image(image)

+ [A list of bounding boxes (x1, y1, x2, y2)]

+

+ """

+ raise NotImplementedError

+

+ def detect_from_directory(self, path, extensions=['.jpg', '.png'], recursive=False, show_progress_bar=True):

+ """Detects faces from all the images present in a given directory.

+

+ Arguments:

+ path {string} -- a string containing a path that points to the folder containing the images

+

+ Keyword Arguments:

+ extensions {list} -- list of strings containing the extensions to be

+ considered, in the format ``.extension_name`` (default: {['.jpg', '.png']})

+ recursive {bool} -- whether to scan the folder recursively (default: {False})

+ show_progress_bar {bool} -- display a progress bar (default: {True})

+

+ Example:

+ >>> directory = 'data'

+ ... detected_faces = detect_from_directory(directory)

+ {A dictionary of [lists containing bounding boxes(x1, y1, x2, y2)]}

+

+ """

+ if self.verbose:

+ logger = logging.getLogger(__name__)

+

+ if len(extensions) == 0:

+ if self.verbose:

+ logger.error("Expected at least one extension, but none was received.")

+ raise ValueError

+

+ if self.verbose:

+ logger.info("Constructing the list of images.")

+ additional_pattern = '/**/*' if recursive else '/*'

+ files = []

+ for extension in extensions:

+ files.extend(glob.glob(path + additional_pattern + extension, recursive=recursive))

+

+ if self.verbose:

+ logger.info("Finished searching for images. %s images found", len(files))

+ logger.info("Preparing to run the detection.")

+

+ predictions = {}

+ for image_path in tqdm(files, disable=not show_progress_bar):

+ if self.verbose:

+ logger.info("Running the face detector on image: %s", image_path)

+ predictions[image_path] = self.detect_from_image(image_path)

+

+ if self.verbose:

+ logger.info("The detector was successfully run on all %s images", len(files))

+

+ return predictions

+

+ @property

+ def reference_scale(self):

+ raise NotImplementedError

+

+ @property

+ def reference_x_shift(self):

+ raise NotImplementedError

+

+ @property

+ def reference_y_shift(self):

+ raise NotImplementedError

+

+ @staticmethod

+ def tensor_or_path_to_ndarray(tensor_or_path, rgb=True):

+ """Convert path (represented as a string) or torch.tensor to a numpy.ndarray

+

+ Arguments:

+ tensor_or_path {numpy.ndarray, torch.tensor or string} -- path to the image, or the image itself

+ """

+ if isinstance(tensor_or_path, str):

+ return cv2.imread(tensor_or_path) if not rgb else io.imread(tensor_or_path)

+ elif torch.is_tensor(tensor_or_path):

+ # Call cpu() in case it's coming from CUDA

+ return tensor_or_path.cpu().numpy()[..., ::-1].copy() if not rgb else tensor_or_path.cpu().numpy()

+ elif isinstance(tensor_or_path, np.ndarray):

+ return tensor_or_path[..., ::-1].copy() if not rgb else tensor_or_path

+ else:

+ raise TypeError

diff --git a/FaceLandmarkDetection/face_alignment/detection/dlib/__init__.py b/FaceLandmarkDetection/face_alignment/detection/dlib/__init__.py

new file mode 100644

index 0000000000000000000000000000000000000000..d8e5ee5fd5b6dd145f3e3ec65c02d2d8befaef59

--- /dev/null

+++ b/FaceLandmarkDetection/face_alignment/detection/dlib/__init__.py

@@ -0,0 +1 @@

+from .dlib_detector import DlibDetector as FaceDetector

\ No newline at end of file

diff --git a/FaceLandmarkDetection/face_alignment/detection/dlib/dlib_detector.py b/FaceLandmarkDetection/face_alignment/detection/dlib/dlib_detector.py

new file mode 100644

index 0000000000000000000000000000000000000000..6cc8368aef0203753fad4637b4e3981281977fe6

--- /dev/null

+++ b/FaceLandmarkDetection/face_alignment/detection/dlib/dlib_detector.py

@@ -0,0 +1,68 @@

+import os

+import cv2

+import dlib

+

+try:

+ import urllib.request as request_file

+except BaseException:

+ import urllib as request_file

+

+from ..core import FaceDetector

+from ...utils import appdata_dir

+

+

+class DlibDetector(FaceDetector):

+ def __init__(self, device, path_to_detector=None, verbose=False):

+ super().__init__(device, verbose)

+

+ print('Warning: this detector is deprecated. Please use a different one, e.g. S3FD.')

+ base_path = os.path.join(appdata_dir('face_alignment'), "data")

+

+ # Initialise the face detector

+ if 'cuda' in device:

+ if path_to_detector is None:

+ path_to_detector = os.path.join(

+ base_path, "mmod_human_face_detector.dat")

+

+ if not os.path.isfile(path_to_detector):

+ print("Downloading the face detection CNN. Please wait...")

+

+ path_to_temp_detector = os.path.join(

+ base_path, "mmod_human_face_detector.dat.download")

+

+ if os.path.isfile(path_to_temp_detector):

+ os.remove(os.path.join(path_to_temp_detector))

+

+ request_file.urlretrieve(

+ "https://www.adrianbulat.com/downloads/dlib/mmod_human_face_detector.dat",

+ os.path.join(path_to_temp_detector))

+

+ os.rename(os.path.join(path_to_temp_detector), os.path.join(path_to_detector))

+

+ self.face_detector = dlib.cnn_face_detection_model_v1(path_to_detector)

+ else:

+ self.face_detector = dlib.get_frontal_face_detector()

+

+ def detect_from_image(self, tensor_or_path):

+ image = self.tensor_or_path_to_ndarray(tensor_or_path, rgb=False)

+

+ detected_faces = self.face_detector(cv2.cvtColor(image, cv2.COLOR_BGR2GRAY))

+

+ if 'cuda' not in self.device:

+ detected_faces = [[d.left(), d.top(), d.right(), d.bottom()] for d in detected_faces]

+ else:

+ detected_faces = [[d.rect.left(), d.rect.top(), d.rect.right(), d.rect.bottom()] for d in detected_faces]

+

+ return detected_faces

+

+ @property

+ def reference_scale(self):

+ return 195

+

+ @property

+ def reference_x_shift(self):

+ return 0

+

+ @property

+ def reference_y_shift(self):

+ return 0

diff --git a/FaceLandmarkDetection/face_alignment/detection/folder/__init__.py b/FaceLandmarkDetection/face_alignment/detection/folder/__init__.py

new file mode 100644

index 0000000000000000000000000000000000000000..0a9128ee0e13eb6f0058dbd480046aee50be336f

--- /dev/null

+++ b/FaceLandmarkDetection/face_alignment/detection/folder/__init__.py

@@ -0,0 +1 @@

+from .folder_detector import FolderDetector as FaceDetector

\ No newline at end of file

diff --git a/FaceLandmarkDetection/face_alignment/detection/folder/folder_detector.py b/FaceLandmarkDetection/face_alignment/detection/folder/folder_detector.py

new file mode 100644

index 0000000000000000000000000000000000000000..add19fa4e4b933619b75d1dce441e3071b235191

--- /dev/null

+++ b/FaceLandmarkDetection/face_alignment/detection/folder/folder_detector.py

@@ -0,0 +1,53 @@

+import os

+import numpy as np

+import torch

+

+from ..core import FaceDetector

+

+

+class FolderDetector(FaceDetector):

+ '''This is a simple helper module that assumes the faces were detected already

+ (either previously or are provided as ground truth).

+

+ The class expects to find the bounding boxes in the same format used by

+ the rest of the face detectors, namely ``list[(x1,y1,x2,y2),...]``.

+ For each image the detector will search for a file with the same name and with one of the

+ following extensions: .npy, .t7 or .pth

+

+ '''

+

+ def __init__(self, device, path_to_detector=None, verbose=False):

+ super(FolderDetector, self).__init__(device, verbose)

+

+ def detect_from_image(self, tensor_or_path):

+ # Only strings supported

+ if not isinstance(tensor_or_path, str):

+ raise ValueError

+

+ base_name = os.path.splitext(tensor_or_path)[0]

+

+ if os.path.isfile(base_name + '.npy'):

+ detected_faces = np.load(base_name + '.npy').tolist()

+ elif os.path.isfile(base_name + '.t7'):

+ detected_faces = torch.load(base_name + '.t7')

+ elif os.path.isfile(base_name + '.pth'):

+ detected_faces = torch.load(base_name + '.pth')

+ else:

+ raise FileNotFoundError

+

+ if not isinstance(detected_faces, list):

+ raise TypeError

+

+ return detected_faces

+

+ @property

+ def reference_scale(self):

+ return 195

+

+ @property

+ def reference_x_shift(self):

+ return 0

+

+ @property

+ def reference_y_shift(self):

+ return 0
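`FolderDetector` does not run a network. It looks for a precomputed box file next to each image, trying `.npy`, `.t7` and `.pth` in that order. The sketch below reproduces only that lookup, using the standard library. `find_box_file` is a hypothetical helper name and is not part of the package.

```python
import os
import tempfile

# Same priority order as FolderDetector.detect_from_image
SIDECAR_EXTENSIONS = ('.npy', '.t7', '.pth')


def find_box_file(image_path):
    """Return the first existing box file next to image_path (hypothetical helper)."""
    base_name = os.path.splitext(image_path)[0]
    for ext in SIDECAR_EXTENSIONS:
        candidate = base_name + ext
        if os.path.isfile(candidate):
            return candidate
    # Mirrors the detector, which raises when no box file exists
    raise FileNotFoundError('no .npy/.t7/.pth file next to ' + image_path)


if __name__ == '__main__':
    with tempfile.TemporaryDirectory() as folder:
        image = os.path.join(folder, 'face_001.jpg')
        # Both a .t7 and a .pth file exist; .t7 wins because it is checked first
        for ext in ('.t7', '.pth'):
            open(os.path.join(folder, 'face_001' + ext), 'w').close()
        print(os.path.basename(find_box_file(image)))  # face_001.t7
```

Only the extension of the image path is replaced. A box file must therefore share the image's directory and base name.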

diff --git a/FaceLandmarkDetection/face_alignment/detection/sfd/__init__.py b/FaceLandmarkDetection/face_alignment/detection/sfd/__init__.py

new file mode 100644

index 0000000000000000000000000000000000000000..5a63ecd45658f22e66c171ada751fb33764d4559

--- /dev/null

+++ b/FaceLandmarkDetection/face_alignment/detection/sfd/__init__.py

@@ -0,0 +1 @@

+from .sfd_detector import SFDDetector as FaceDetector

\ No newline at end of file

diff --git a/FaceLandmarkDetection/face_alignment/detection/sfd/__pycache__/__init__.cpython-36.pyc b/FaceLandmarkDetection/face_alignment/detection/sfd/__pycache__/__init__.cpython-36.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..223f1c8c50f26c44013f12edbb297b3f0822d817

Binary files /dev/null and b/FaceLandmarkDetection/face_alignment/detection/sfd/__pycache__/__init__.cpython-36.pyc differ

diff --git a/FaceLandmarkDetection/face_alignment/detection/sfd/__pycache__/__init__.cpython-37.pyc b/FaceLandmarkDetection/face_alignment/detection/sfd/__pycache__/__init__.cpython-37.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..71478176e62df158a6458d825eedf176cff1cb16

Binary files /dev/null and b/FaceLandmarkDetection/face_alignment/detection/sfd/__pycache__/__init__.cpython-37.pyc differ

diff --git a/FaceLandmarkDetection/face_alignment/detection/sfd/__pycache__/bbox.cpython-36.pyc b/FaceLandmarkDetection/face_alignment/detection/sfd/__pycache__/bbox.cpython-36.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..06a77197d502544882d7676b71e89525fab5eaab

Binary files /dev/null and b/FaceLandmarkDetection/face_alignment/detection/sfd/__pycache__/bbox.cpython-36.pyc differ

diff --git a/FaceLandmarkDetection/face_alignment/detection/sfd/__pycache__/bbox.cpython-37.pyc b/FaceLandmarkDetection/face_alignment/detection/sfd/__pycache__/bbox.cpython-37.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..315d9b9b15d5b094b864f7a4a40c863c5276af0e

Binary files /dev/null and b/FaceLandmarkDetection/face_alignment/detection/sfd/__pycache__/bbox.cpython-37.pyc differ

diff --git a/FaceLandmarkDetection/face_alignment/detection/sfd/__pycache__/detect.cpython-36.pyc b/FaceLandmarkDetection/face_alignment/detection/sfd/__pycache__/detect.cpython-36.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..37daedbfb169a7bf35c91a6d9abbf44d22c4c315

Binary files /dev/null and b/FaceLandmarkDetection/face_alignment/detection/sfd/__pycache__/detect.cpython-36.pyc differ

diff --git a/FaceLandmarkDetection/face_alignment/detection/sfd/__pycache__/detect.cpython-37.pyc b/FaceLandmarkDetection/face_alignment/detection/sfd/__pycache__/detect.cpython-37.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..a5954c1300f601d431745d74ef3cfded16b6980d

Binary files /dev/null and b/FaceLandmarkDetection/face_alignment/detection/sfd/__pycache__/detect.cpython-37.pyc differ

diff --git a/FaceLandmarkDetection/face_alignment/detection/sfd/__pycache__/net_s3fd.cpython-36.pyc b/FaceLandmarkDetection/face_alignment/detection/sfd/__pycache__/net_s3fd.cpython-36.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..0984c1e8a9c2bb8b78fc70c10f6fbc1f6cafc1aa

Binary files /dev/null and b/FaceLandmarkDetection/face_alignment/detection/sfd/__pycache__/net_s3fd.cpython-36.pyc differ

diff --git a/FaceLandmarkDetection/face_alignment/detection/sfd/__pycache__/net_s3fd.cpython-37.pyc b/FaceLandmarkDetection/face_alignment/detection/sfd/__pycache__/net_s3fd.cpython-37.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..bff6f907dbf2c33fdaa0062748bb28a76e8b65ad

Binary files /dev/null and b/FaceLandmarkDetection/face_alignment/detection/sfd/__pycache__/net_s3fd.cpython-37.pyc differ

diff --git a/FaceLandmarkDetection/face_alignment/detection/sfd/__pycache__/sfd_detector.cpython-36.pyc b/FaceLandmarkDetection/face_alignment/detection/sfd/__pycache__/sfd_detector.cpython-36.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..b1b5f8b065f4da5d65f432a774e5b08620da4723

Binary files /dev/null and b/FaceLandmarkDetection/face_alignment/detection/sfd/__pycache__/sfd_detector.cpython-36.pyc differ

diff --git a/FaceLandmarkDetection/face_alignment/detection/sfd/__pycache__/sfd_detector.cpython-37.pyc b/FaceLandmarkDetection/face_alignment/detection/sfd/__pycache__/sfd_detector.cpython-37.pyc

new file mode 100644

index 0000000000000000000000000000000000000000..33f1ccaeb437d82a166b0fb1603628525796c02f

Binary files /dev/null and b/FaceLandmarkDetection/face_alignment/detection/sfd/__pycache__/sfd_detector.cpython-37.pyc differ

diff --git a/FaceLandmarkDetection/face_alignment/detection/sfd/bbox.py b/FaceLandmarkDetection/face_alignment/detection/sfd/bbox.py

new file mode 100644

index 0000000000000000000000000000000000000000..ca662365044608b992e7f15794bd2518b0781d7e

--- /dev/null

+++ b/FaceLandmarkDetection/face_alignment/detection/sfd/bbox.py

@@ -0,0 +1,109 @@

+from __future__ import print_function

+import os

+import sys

+import cv2

+import random

+import datetime

+import time

+import math

+import argparse

+import numpy as np

+import torch

+

+try:

+ from iou import IOU

+except ImportError:

+ # Pure-Python fallback for when the Cython IOU extension (about 10x faster) is not built

+ def IOU(ax1, ay1, ax2, ay2, bx1, by1, bx2, by2):

+ sa = abs((ax2 - ax1) * (ay2 - ay1))

+ sb = abs((bx2 - bx1) * (by2 - by1))

+ x1, y1 = max(ax1, bx1), max(ay1, by1)

+ x2, y2 = min(ax2, bx2), min(ay2, by2)

+ w = x2 - x1

+ h = y2 - y1

+ if w < 0 or h < 0:

+ return 0.0

+ else:

+ return 1.0 * w * h / (sa + sb - w * h)

+

+

+def bboxlog(x1, y1, x2, y2, axc, ayc, aww, ahh):

+ xc, yc, ww, hh = (x2 + x1) / 2, (y2 + y1) / 2, x2 - x1, y2 - y1

+ dx, dy = (xc - axc) / aww, (yc - ayc) / ahh

+ dw, dh = math.log(ww / aww), math.log(hh / ahh)

+ return dx, dy, dw, dh

+

+

+def bboxloginv(dx, dy, dw, dh, axc, ayc, aww, ahh):

+ xc, yc = dx * aww + axc, dy * ahh + ayc

+ ww, hh = math.exp(dw) * aww, math.exp(dh) * ahh

+ x1, x2, y1, y2 = xc - ww / 2, xc + ww / 2, yc - hh / 2, yc + hh / 2

+ return x1, y1, x2, y2

+

+

+def nms(dets, thresh):

+ if 0 == len(dets):

+ return []

+ x1, y1, x2, y2, scores = dets[:, 0], dets[:, 1], dets[:, 2], dets[:, 3], dets[:, 4]

+ areas = (x2 - x1 + 1) * (y2 - y1 + 1)

+ order = scores.argsort()[::-1]

+

+ keep = []

+ while order.size > 0:

+ i = order[0]

+ keep.append(i)

+ xx1, yy1 = np.maximum(x1[i], x1[order[1:]]), np.maximum(y1[i], y1[order[1:]])

+ xx2, yy2 = np.minimum(x2[i], x2[order[1:]]), np.minimum(y2[i], y2[order[1:]])

+

+ w, h = np.maximum(0.0, xx2 - xx1 + 1), np.maximum(0.0, yy2 - yy1 + 1)

+ ovr = w * h / (areas[i] + areas[order[1:]] - w * h)

+

+ inds = np.where(ovr <= thresh)[0]

+ order = order[inds + 1]

+

+ return keep

+

+

+def encode(matched, priors, variances):

+ """Encode the ground-truth boxes matched to each prior (by Jaccard overlap)

+ as offsets from the prior boxes, scaled by the prior-box variances.

+ Args:

+ matched: (tensor) Coords of ground truth for each prior in point-form

+ Shape: [num_priors, 4].

+ priors: (tensor) Prior boxes in center-offset form

+ Shape: [num_priors,4].

+ variances: (list[float]) Variances of priorboxes

+ Return:

+ encoded boxes (tensor), Shape: [num_priors, 4]

+ """

+

+ # dist b/t match center and prior's center

+ g_cxcy = (matched[:, :2] + matched[:, 2:]) / 2 - priors[:, :2]

+ # encode variance

+ g_cxcy /= (variances[0] * priors[:, 2:])

+ # match wh / prior wh

+ g_wh = (matched[:, 2:] - matched[:, :2]) / priors[:, 2:]

+ g_wh = torch.log(g_wh) / variances[1]

+ # return target for smooth_l1_loss

+ return torch.cat([g_cxcy, g_wh], 1) # [num_priors,4]

+

+

+def decode(loc, priors, variances):

+ """Decode locations from predictions using priors to undo

+ the encoding we did for offset regression at train time.

+ Args:

+ loc (tensor): location predictions for loc layers,

+ Shape: [num_priors,4]

+ priors (tensor): Prior boxes in center-offset form.

+ Shape: [num_priors,4].

+ variances: (list[float]) Variances of priorboxes

+ Return:

+ decoded bounding box predictions

+ """

+

+ boxes = torch.cat((

+ priors[:, :2] + loc[:, :2] * variances[0] * priors[:, 2:],

+ priors[:, 2:] * torch.exp(loc[:, 2:] * variances[1])), 1)

+ boxes[:, :2] -= boxes[:, 2:] / 2

+ boxes[:, 2:] += boxes[:, :2]

+ return boxes
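`encode` and `decode` above use an SSD-style parameterisation:

- Centre offsets are divided by `variances[0] * prior_size`.
- Sizes are stored as `log(box_size / prior_size) / variances[1]`.

The sketch below shows the same arithmetic for a single box in plain Python. It assumes the `[0.1, 0.2]` variances that `detect.py` passes to `decode`. `encode_box` and `decode_box` are hypothetical names used only here.

```python
import math


def encode_box(box, prior, variances=(0.1, 0.2)):
    """Encode a corner-form box (x1, y1, x2, y2) against a centre-form prior (cx, cy, w, h)."""
    x1, y1, x2, y2 = box
    pcx, pcy, pw, ph = prior
    # Centre offset, normalised by the prior size and the first variance
    dx = ((x1 + x2) / 2 - pcx) / (variances[0] * pw)
    dy = ((y1 + y2) / 2 - pcy) / (variances[0] * ph)
    # Log-space size ratio, scaled by the second variance
    dw = math.log((x2 - x1) / pw) / variances[1]
    dh = math.log((y2 - y1) / ph) / variances[1]
    return dx, dy, dw, dh


def decode_box(loc, prior, variances=(0.1, 0.2)):
    """Invert encode_box, returning a corner-form box like bbox.decode does."""
    dx, dy, dw, dh = loc
    pcx, pcy, pw, ph = prior
    cx = pcx + dx * variances[0] * pw
    cy = pcy + dy * variances[0] * ph
    w = pw * math.exp(dw * variances[1])
    h = ph * math.exp(dh * variances[1])
    # Centre form -> corner form (the two in-place updates at the end of decode)
    return cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2


prior = (18.0, 18.0, 16.0, 16.0)  # cell (4, 4) of the stride-4 map, 16px anchor
loc = encode_box((10.0, 12.0, 30.0, 28.0), prior)
print(decode_box(loc, prior))  # approximately (10.0, 12.0, 30.0, 28.0)
```

A zero regression output decodes to the prior itself. The network therefore only has to learn small corrections around each anchor.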

diff --git a/FaceLandmarkDetection/face_alignment/detection/sfd/detect.py b/FaceLandmarkDetection/face_alignment/detection/sfd/detect.py

new file mode 100644

index 0000000000000000000000000000000000000000..84d120de15145a830938a2edcf9cf8d29bc59f72

--- /dev/null

+++ b/FaceLandmarkDetection/face_alignment/detection/sfd/detect.py

@@ -0,0 +1,75 @@

+import torch

+import torch.nn.functional as F

+

+import os

+import sys

+import cv2

+import random

+import datetime

+import math

+import argparse

+import numpy as np

+

+import scipy.io as sio

+import zipfile

+from .net_s3fd import s3fd

+from .bbox import *

+

+

+def detect(net, img, device):

+ img = img - np.array([104, 117, 123])

+ img = img.transpose(2, 0, 1)

+ img = img.reshape((1,) + img.shape)

+

+ if 'cuda' in device:

+ torch.backends.cudnn.benchmark = True

+

+ img = torch.from_numpy(img).float().to(device)

+ BB, CC, HH, WW = img.size()

+ with torch.no_grad():

+ olist = net(img)

+

+ bboxlist = []

+ for i in range(len(olist) // 2):

+ olist[i * 2] = F.softmax(olist[i * 2], dim=1)

+ olist = [oelem.data.cpu() for oelem in olist]

+ for i in range(len(olist) // 2):

+ ocls, oreg = olist[i * 2], olist[i * 2 + 1]

+ FB, FC, FH, FW = ocls.size() # feature map size

+ stride = 2**(i + 2) # 4,8,16,32,64,128

+ anchor = stride * 4

+ poss = zip(*np.where(ocls[:, 1, :, :] > 0.05))

+ for Iindex, hindex, windex in poss:

+ axc, ayc = stride / 2 + windex * stride, stride / 2 + hindex * stride

+ score = ocls[0, 1, hindex, windex]

+ loc = oreg[0, :, hindex, windex].contiguous().view(1, 4)

+ priors = torch.Tensor([[axc / 1.0, ayc / 1.0, stride * 4 / 1.0, stride * 4 / 1.0]])

+ variances = [0.1, 0.2]

+ box = decode(loc, priors, variances)

+ x1, y1, x2, y2 = box[0] * 1.0

+ # cv2.rectangle(imgshow,(int(x1),int(y1)),(int(x2),int(y2)),(0,0,255),1)

+ bboxlist.append([x1, y1, x2, y2, score])

+ bboxlist = np.array(bboxlist)

+ if 0 == len(bboxlist):

+ bboxlist = np.zeros((1, 5))

+

+ return bboxlist

+

+

+def flip_detect(net, img, device):

+ img = cv2.flip(img, 1)

+ b = detect(net, img, device)

+

+ bboxlist = np.zeros(b.shape)

+ bboxlist[:, 0] = img.shape[1] - b[:, 2]

+ bboxlist[:, 1] = b[:, 1]

+ bboxlist[:, 2] = img.shape[1] - b[:, 0]

+ bboxlist[:, 3] = b[:, 3]

+ bboxlist[:, 4] = b[:, 4]

+ return bboxlist

+

+

+def pts_to_bb(pts):

+ min_x, min_y = np.min(pts, axis=0)

+ max_x, max_y = np.max(pts, axis=0)

+ return np.array([min_x, min_y, max_x, max_y])
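Inside `detect()`, the i-th classification/regression pair is treated as follows:

- The stride is `2**(i + 2)`.
- The square anchor side is `4 * stride`.
- The prior for cell `(hindex, windex)` is centred at `stride / 2 + index * stride`.

`flip_detect()` maps boxes found on the mirrored image back to the original by reflecting the x coordinates. A minimal sketch of both rules follows. `prior_for_cell` and `unflip_box` are hypothetical helper names.

```python
def prior_for_cell(level, hindex, windex):
    """Centre-form prior (cx, cy, w, h) for a feature-map cell, as built in detect()."""
    stride = 2 ** (level + 2)  # 4, 8, 16, 32, 64, 128 for levels 0..5
    anchor = stride * 4        # square anchor, four times the stride
    cx = stride / 2 + windex * stride
    cy = stride / 2 + hindex * stride
    return cx, cy, float(anchor), float(anchor)


def unflip_box(box, image_width):
    """Map (x1, y1, x2, y2, score) found on a horizontally flipped image
    back to the original image, as flip_detect() does."""
    x1, y1, x2, y2, score = box
    # Reflection swaps the roles of the left and right edges
    return image_width - x2, y1, image_width - x1, y2, score


print(prior_for_cell(2, 3, 5))                   # (88.0, 56.0, 64.0, 64.0)
print(unflip_box((10, 5, 40, 50, 0.9), 100))     # (60, 5, 90, 50, 0.9)
```

Applying `unflip_box` twice with the same width returns the original box. This is a quick sanity check that the edge swap is correct.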

diff --git a/FaceLandmarkDetection/face_alignment/detection/sfd/net_s3fd.py b/FaceLandmarkDetection/face_alignment/detection/sfd/net_s3fd.py

new file mode 100644

index 0000000000000000000000000000000000000000..fc64313c277ab594d0257585c70f147606693452

--- /dev/null

+++ b/FaceLandmarkDetection/face_alignment/detection/sfd/net_s3fd.py

@@ -0,0 +1,129 @@

+import torch

+import torch.nn as nn

+import torch.nn.functional as F

+

+

+class L2Norm(nn.Module):

+ def __init__(self, n_channels, scale=1.0):

+ super(L2Norm, self).__init__()

+ self.n_channels = n_channels

+ self.scale = scale

+ self.eps = 1e-10

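+ self.weight = nn.Parameter(torch.Tensor(self.n_channels))

+ # Initialise every channel weight to `scale`; fill_ cannot propagate NaN from uninitialised memory the way "*= 0.0" can

+ self.weight.data.fill_(self.scale)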

+

+ def forward(self, x):

+ norm = x.pow(2).sum(dim=1, keepdim=True).sqrt() + self.eps

+ x = x / norm * self.weight.view(1, -1, 1, 1)

+ return x

+

+

+class s3fd(nn.Module):

+ def __init__(self):

+ super(s3fd, self).__init__()

+ self.conv1_1 = nn.Conv2d(3, 64, kernel_size=3, stride=1, padding=1)

+ self.conv1_2 = nn.Conv2d(64, 64, kernel_size=3, stride=1, padding=1)

+

+ self.conv2_1 = nn.Conv2d(64, 128, kernel_size=3, stride=1, padding=1)

+ self.conv2_2 = nn.Conv2d(128, 128, kernel_size=3, stride=1, padding=1)

+

+ self.conv3_1 = nn.Conv2d(128, 256, kernel_size=3, stride=1, padding=1)

+ self.conv3_2 = nn.Conv2d(256, 256, kernel_size=3, stride=1, padding=1)

+ self.conv3_3 = nn.Conv2d(256, 256, kernel_size=3, stride=1, padding=1)

+

+ self.conv4_1 = nn.Conv2d(256, 512, kernel_size=3, stride=1, padding=1)

+ self.conv4_2 = nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=1)

+ self.conv4_3 = nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=1)

+

+ self.conv5_1 = nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=1)

+ self.conv5_2 = nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=1)

+ self.conv5_3 = nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=1)

+

+ self.fc6 = nn.Conv2d(512, 1024, kernel_size=3, stride=1, padding=3)

+ self.fc7 = nn.Conv2d(1024, 1024, kernel_size=1, stride=1, padding=0)

+

+ self.conv6_1 = nn.Conv2d(1024, 256, kernel_size=1, stride=1, padding=0)

+ self.conv6_2 = nn.Conv2d(256, 512, kernel_size=3, stride=2, padding=1)

+

+ self.conv7_1 = nn.Conv2d(512, 128, kernel_size=1, stride=1, padding=0)

+ self.conv7_2 = nn.Conv2d(128, 256, kernel_size=3, stride=2, padding=1)

+

+ self.conv3_3_norm = L2Norm(256, scale=10)

+ self.conv4_3_norm = L2Norm(512, scale=8)

+ self.conv5_3_norm = L2Norm(512, scale=5)

+

+ self.conv3_3_norm_mbox_conf = nn.Conv2d(256, 4, kernel_size=3, stride=1, padding=1)

+ self.conv3_3_norm_mbox_loc = nn.Conv2d(256, 4, kernel_size=3, stride=1, padding=1)

+ self.conv4_3_norm_mbox_conf = nn.Conv2d(512, 2, kernel_size=3, stride=1, padding=1)

+ self.conv4_3_norm_mbox_loc = nn.Conv2d(512, 4, kernel_size=3, stride=1, padding=1)

+ self.conv5_3_norm_mbox_conf = nn.Conv2d(512, 2, kernel_size=3, stride=1, padding=1)

+ self.conv5_3_norm_mbox_loc = nn.Conv2d(512, 4, kernel_size=3, stride=1, padding=1)

+

+ self.fc7_mbox_conf = nn.Conv2d(1024, 2, kernel_size=3, stride=1, padding=1)

+ self.fc7_mbox_loc = nn.Conv2d(1024, 4, kernel_size=3, stride=1, padding=1)

+ self.conv6_2_mbox_conf = nn.Conv2d(512, 2, kernel_size=3, stride=1, padding=1)

+ self.conv6_2_mbox_loc = nn.Conv2d(512, 4, kernel_size=3, stride=1, padding=1)

+ self.conv7_2_mbox_conf = nn.Conv2d(256, 2, kernel_size=3, stride=1, padding=1)

+ self.conv7_2_mbox_loc = nn.Conv2d(256, 4, kernel_size=3, stride=1, padding=1)

+

+ def forward(self, x):

+ h = F.relu(self.conv1_1(x))

+ h = F.relu(self.conv1_2(h))

+ h = F.max_pool2d(h, 2, 2)

+

+ h = F.relu(self.conv2_1(h))

+ h = F.relu(self.conv2_2(h))

+ h = F.max_pool2d(h, 2, 2)

+

+ h = F.relu(self.conv3_1(h))

+ h = F.relu(self.conv3_2(h))

+ h = F.relu(self.conv3_3(h))

+ f3_3 = h

+ h = F.max_pool2d(h, 2, 2)

+

+ h = F.relu(self.conv4_1(h))

+ h = F.relu(self.conv4_2(h))

+ h = F.relu(self.conv4_3(h))

+ f4_3 = h

+ h = F.max_pool2d(h, 2, 2)

+

+ h = F.relu(self.conv5_1(h))

+ h = F.relu(self.conv5_2(h))

+ h = F.relu(self.conv5_3(h))

+ f5_3 = h

+ h = F.max_pool2d(h, 2, 2)

+

+ h = F.relu(self.fc6(h))

+ h = F.relu(self.fc7(h))

+ ffc7 = h

+ h = F.relu(self.conv6_1(h))

+ h = F.relu(self.conv6_2(h))

+ f6_2 = h

+ h = F.relu(self.conv7_1(h))

+ h = F.relu(self.conv7_2(h))

+ f7_2 = h

+

+ f3_3 = self.conv3_3_norm(f3_3)

+ f4_3 = self.conv4_3_norm(f4_3)

+ f5_3 = self.conv5_3_norm(f5_3)

+

+ cls1 = self.conv3_3_norm_mbox_conf(f3_3)

+ reg1 = self.conv3_3_norm_mbox_loc(f3_3)

+ cls2 = self.conv4_3_norm_mbox_conf(f4_3)

+ reg2 = self.conv4_3_norm_mbox_loc(f4_3)

+ cls3 = self.conv5_3_norm_mbox_conf(f5_3)

+ reg3 = self.conv5_3_norm_mbox_loc(f5_3)

+ cls4 = self.fc7_mbox_conf(ffc7)

+ reg4 = self.fc7_mbox_loc(ffc7)

+ cls5 = self.conv6_2_mbox_conf(f6_2)

+ reg5 = self.conv6_2_mbox_loc(f6_2)

+ cls6 = self.conv7_2_mbox_conf(f7_2)

+ reg6 = self.conv7_2_mbox_loc(f7_2)

+

+ # max-out background label

+ chunk = torch.chunk(cls1, 4, 1)

+ bmax = torch.max(torch.max(chunk[0], chunk[1]), chunk[2])

+ cls1 = torch.cat([bmax, chunk[3]], dim=1)

+

+ return [cls1, reg1, cls2, reg2, cls3, reg3, cls4, reg4, cls5, reg5, cls6, reg6]
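The first head, `conv3_3_norm_mbox_conf`, predicts four channels: three background scores and one face score. `forward` collapses the three background channels with an element-wise max. As a result, every head emits the same two-class layout before `detect()` applies the softmax.

The sketch below shows this per location in plain Python. `maxout_background` is a hypothetical name.

```python
import math


def maxout_background(logits):
    """Collapse [bg0, bg1, bg2, face] logits to [max(bg0, bg1, bg2), face]."""
    bg0, bg1, bg2, face = logits
    return [max(max(bg0, bg1), bg2), face]


def softmax(values):
    # Subtract the max before exponentiating for numerical stability
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


two_class = maxout_background([0.1, 0.7, 0.3, 0.9])
print(two_class)              # [0.7, 0.9]
print(softmax(two_class)[1])  # face probability; detect() keeps cells above 0.05
```

Taking the max over several background channels suppresses false positives on the smallest, most cluttered scale. The head's output format does not change.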

diff --git a/FaceLandmarkDetection/face_alignment/detection/sfd/sfd_detector.py b/FaceLandmarkDetection/face_alignment/detection/sfd/sfd_detector.py

new file mode 100644

index 0000000000000000000000000000000000000000..29c7768558b83dceea8f94e4d859b71dfa54cc85

--- /dev/null

+++ b/FaceLandmarkDetection/face_alignment/detection/sfd/sfd_detector.py

@@ -0,0 +1,51 @@

+import torch

+import cv2

+from torch.utils.model_zoo import load_url

+

+from ..core import FaceDetector

+

+from .net_s3fd import s3fd

+from .bbox import *

+from .detect import *

+

+models_urls = {

+ 's3fd': 'https://www.adrianbulat.com/downloads/python-fan/s3fd-619a316812.pth',

+}

+

+

+class SFDDetector(FaceDetector):

+ def __init__(self, device, path_to_detector=None, verbose=False):

+ super(SFDDetector, self).__init__(device, verbose)

+

+ # Initialise the face detector

+ if path_to_detector is None:

+ model_weights = load_url(models_urls['s3fd'])

+ else:

+ model_weights = torch.load(path_to_detector)

+

+ self.face_detector = s3fd()

+ self.face_detector.load_state_dict(model_weights)

+ self.face_detector.to(device)

+ self.face_detector.eval()

+

+ def detect_from_image(self, tensor_or_path):

+ image = self.tensor_or_path_to_ndarray(tensor_or_path)

+

+ bboxlist = detect(self.face_detector, image, device=self.device)

+ keep = nms(bboxlist, 0.3)

+ bboxlist = bboxlist[keep, :]

+ bboxlist = [x for x in bboxlist if x[-1] > 0.5]

+

+ return bboxlist

+

+ @property

+ def reference_scale(self):

+ return 195

+

+ @property

+ def reference_x_shift(self):

+ return 0

+

+ @property

+ def reference_y_shift(self):

+ return 0
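`detect_from_image` runs greedy non-maximum suppression at an IoU of 0.3. It then keeps only the boxes that score above 0.5. The sketch below performs the same two steps without dependencies on a list of `(x1, y1, x2, y2, score)` tuples. `iou` and `postprocess` are hypothetical names. The `+ 1` in widths and heights matches the pixel-inclusive convention used by `bbox.nms`.

```python
def iou(a, b):
    """IoU with the +1 pixel convention used by nms() in bbox.py."""
    xx1, yy1 = max(a[0], b[0]), max(a[1], b[1])
    xx2, yy2 = min(a[2], b[2]), min(a[3], b[3])
    w, h = max(0.0, xx2 - xx1 + 1), max(0.0, yy2 - yy1 + 1)
    inter = w * h
    area_a = (a[2] - a[0] + 1) * (a[3] - a[1] + 1)
    area_b = (b[2] - b[0] + 1) * (b[3] - b[1] + 1)
    return inter / (area_a + area_b - inter)


def postprocess(dets, iou_thresh=0.3, score_thresh=0.5):
    """Greedy NMS followed by a score cut, mirroring SFDDetector.detect_from_image."""
    # Visit boxes from highest to lowest score
    order = sorted(range(len(dets)), key=lambda i: dets[i][4], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Keep only boxes whose overlap with the chosen one is at most iou_thresh
        order = [i for i in order if iou(dets[best], dets[i]) <= iou_thresh]
    return [dets[i] for i in keep if dets[i][4] > score_thresh]


dets = [(10, 10, 50, 50, 0.9), (12, 12, 52, 52, 0.8),
        (100, 100, 140, 140, 0.6), (200, 200, 220, 220, 0.4)]
print(postprocess(dets))  # [(10, 10, 50, 50, 0.9), (100, 100, 140, 140, 0.6)]
```

The second box overlaps the first by an IoU of about 0.83 and is suppressed. The fourth box survives NMS but falls below the 0.5 score threshold.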

diff --git a/FaceLandmarkDetection/face_alignment/models.py b/FaceLandmarkDetection/face_alignment/models.py

new file mode 100644

index 0000000000000000000000000000000000000000..ee2dde32bdf72c25a4600e48efa73ffc0d4a3893

--- /dev/null

+++ b/FaceLandmarkDetection/face_alignment/models.py

@@ -0,0 +1,261 @@

+import torch

+import torch.nn as nn

+import torch.nn.functional as F

+import math

+

+

+def conv3x3(in_planes, out_planes, strd=1, padding=1, bias=False):

+ "3x3 convolution with padding"

+ return nn.Conv2d(in_planes, out_planes, kernel_size=3,

+ stride=strd, padding=padding, bias=bias)

+

+

+class ConvBlock(nn.Module):

+ def __init__(self, in_planes, out_planes):

+ super(ConvBlock, self).__init__()

+ self.bn1 = nn.BatchNorm2d(in_planes)

+ self.conv1 = conv3x3(in_planes, int(out_planes / 2))

+ self.bn2 = nn.BatchNorm2d(int(out_planes / 2))

+ self.conv2 = conv3x3(int(out_planes / 2), int(out_planes / 4))

+ self.bn3 = nn.BatchNorm2d(int(out_planes / 4))

+ self.conv3 = conv3x3(int(out_planes / 4), int(out_planes / 4))

+

+ if in_planes != out_planes:

+ self.downsample = nn.Sequential(

+ nn.BatchNorm2d(in_planes),

+ nn.ReLU(True),

+ nn.Conv2d(in_planes, out_planes,

+ kernel_size=1, stride=1, bias=False),

+ )

+ else:

+ self.downsample = None

+

+ def forward(self, x):

+ residual = x

+

+ out1 = self.bn1(x)

+ out1 = F.relu(out1, True)

+ out1 = self.conv1(out1)

+

+ out2 = self.bn2(out1)

+ out2 = F.relu(out2, True)

+ out2 = self.conv2(out2)

+

+ out3 = self.bn3(out2)

+ out3 = F.relu(out3, True)

+ out3 = self.conv3(out3)

+

+ out3 = torch.cat((out1, out2, out3), 1)

+

+ if self.downsample is not None:

+ residual = self.downsample(residual)

+

+ out3 += residual

+

+ return out3

+

+

+class Bottleneck(nn.Module):

+

+ expansion = 4

+

+ def __init__(self, inplanes, planes, stride=1, downsample=None):

+ super(Bottleneck, self).__init__()

+ self.conv1 = nn.Conv2d(inplanes, planes, kernel_size=1, bias=False)

+ self.bn1 = nn.BatchNorm2d(planes)

+ self.conv2 = nn.Conv2d(planes, planes, kernel_size=3, stride=stride,

+ padding=1, bias=False)

+ self.bn2 = nn.BatchNorm2d(planes)

+ self.conv3 = nn.Conv2d(planes, planes * 4, kernel_size=1, bias=False)

+ self.bn3 = nn.BatchNorm2d(planes * 4)

+ self.relu = nn.ReLU(inplace=True)

+ self.downsample = downsample

+ self.stride = stride

+

+ def forward(self, x):

+ residual = x

+

+ out = self.conv1(x)

+ out = self.bn1(out)

+ out = self.relu(out)

+

+ out = self.conv2(out)

+ out = self.bn2(out)

+ out = self.relu(out)

+

+ out = self.conv3(out)

+ out = self.bn3(out)

+

+ if self.downsample is not None:

+ residual = self.downsample(x)

+

+ out += residual

+ out = self.relu(out)

+

+ return out

+

+

+class HourGlass(nn.Module):

+ def __init__(self, num_modules, depth, num_features):

+ super(HourGlass, self).__init__()

+ self.num_modules = num_modules

+ self.depth = depth

+ self.features = num_features

+

+ self._generate_network(self.depth)

+

+ def _generate_network(self, level):

+ self.add_module('b1_' + str(level), ConvBlock(self.features, self.features))

+

+ self.add_module('b2_' + str(level), ConvBlock(self.features, self.features))

+

+ if level > 1:

+ self._generate_network(level - 1)

+ else:

+ self.add_module('b2_plus_' + str(level), ConvBlock(self.features, self.features))

+

+ self.add_module('b3_' + str(level), ConvBlock(self.features, self.features))

+

+ def _forward(self, level, inp):

+ # Upper branch

+ up1 = inp

+ up1 = self._modules['b1_' + str(level)](up1)

+

+ # Lower branch

+ low1 = F.avg_pool2d(inp, 2, stride=2)

+ low1 = self._modules['b2_' + str(level)](low1)

+

+ if level > 1:

+ low2 = self._forward(level - 1, low1)

+ else:

+ low2 = low1

+ low2 = self._modules['b2_plus_' + str(level)](low2)

+

+ low3 = low2

+ low3 = self._modules['b3_' + str(level)](low3)

+

+ up2 = F.interpolate(low3, scale_factor=2, mode='nearest')

+

+ return up1 + up2

+

+ def forward(self, x):

+ return self._forward(self.depth, x)

+

+

+class FAN(nn.Module):

+

+ def __init__(self, num_modules=1):

+ super(FAN, self).__init__()

+ self.num_modules = num_modules

+

+ # Base part

+ self.conv1 = nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3)

+ self.bn1 = nn.BatchNorm2d(64)

+ self.conv2 = ConvBlock(64, 128)

+ self.conv3 = ConvBlock(128, 128)

+ self.conv4 = ConvBlock(128, 256)

+

+ # Stacking part

+ for hg_module in range(self.num_modules):

+ self.add_module('m' + str(hg_module), HourGlass(1, 4, 256))

+ self.add_module('top_m_' + str(hg_module), ConvBlock(256, 256))

+ self.add_module('conv_last' + str(hg_module),

+ nn.Conv2d(256, 256, kernel_size=1, stride=1, padding=0))

+ self.add_module('bn_end' + str(hg_module), nn.BatchNorm2d(256))

+ self.add_module('l' + str(hg_module), nn.Conv2d(256,

+ 68, kernel_size=1, stride=1, padding=0))

+

+ if hg_module < self.num_modules - 1:

+ self.add_module(

+ 'bl' + str(hg_module), nn.Conv2d(256, 256, kernel_size=1, stride=1, padding=0))

+ self.add_module('al' + str(hg_module), nn.Conv2d(68,

+ 256, kernel_size=1, stride=1, padding=0))

+

+ def forward(self, x):

+ x = F.relu(self.bn1(self.conv1(x)), True)

+ x = F.avg_pool2d(self.conv2(x), 2, stride=2)

+ x = self.conv3(x)

+ x = self.conv4(x)

+

+ previous = x

+

+ outputs = []

+ for i in range(self.num_modules):

+ hg = self._modules['m' + str(i)](previous)

+

+ ll = hg

+ ll = self._modules['top_m_' + str(i)](ll)

+

+ ll = F.relu(self._modules['bn_end' + str(i)]

+ (self._modules['conv_last' + str(i)](ll)), True)

+

+ # Predict heatmaps

+ tmp_out = self._modules['l' + str(i)](ll)

+ outputs.append(tmp_out)

+

+ if i < self.num_modules - 1:

+ ll = self._modules['bl' + str(i)](ll)

+ tmp_out_ = self._modules['al' + str(i)](tmp_out)

+ previous = previous + ll + tmp_out_

+

+ return outputs

+

+

+class ResNetDepth(nn.Module):

+

+ def __init__(self, block=Bottleneck, layers=[3, 8, 36, 3], num_classes=68):

+ self.inplanes = 64

+ super(ResNetDepth, self).__init__()

+ self.conv1 = nn.Conv2d(3 + 68, 64, kernel_size=7, stride=2, padding=3,

+ bias=False)

+ self.bn1 = nn.BatchNorm2d(64)

+ self.relu = nn.ReLU(inplace=True)

+ self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)

+ self.layer1 = self._make_layer(block, 64, layers[0])

+ self.layer2 = self._make_layer(block, 128, layers[1], stride=2)

+ self.layer3 = self._make_layer(block, 256, layers[2], stride=2)

+ self.layer4 = self._make_layer(block, 512, layers[3], stride=2)

+ self.avgpool = nn.AvgPool2d(7)

+ self.fc = nn.Linear(512 * block.expansion, num_classes)

+

+ for m in self.modules():

+ if isinstance(m, nn.Conv2d):

+ n = m.kernel_size[0] * m.kernel_size[1] * m.out_channels

+ m.weight.data.normal_(0, math.sqrt(2. / n))

+ elif isinstance(m, nn.BatchNorm2d):

+ m.weight.data.fill_(1)

+ m.bias.data.zero_()

+

+ def _make_layer(self, block, planes, blocks, stride=1):

+ downsample = None

+ if stride != 1 or self.inplanes != planes * block.expansion:

+ downsample = nn.Sequential(

+ nn.Conv2d(self.inplanes, planes * block.expansion,

+ kernel_size=1, stride=stride, bias=False),

+ nn.BatchNorm2d(planes * block.expansion),

+ )

+

+ layers = []

+ layers.append(block(self.inplanes, planes, stride, downsample))

+ self.inplanes = planes * block.expansion

+ for i in range(1, blocks):

+ layers.append(block(self.inplanes, planes))

+

+ return nn.Sequential(*layers)

+

+ def forward(self, x):

+ x = self.conv1(x)

+ x = self.bn1(x)

+ x = self.relu(x)

+ x = self.maxpool(x)

+

+ x = self.layer1(x)

+ x = self.layer2(x)

+ x = self.layer3(x)

+ x = self.layer4(x)

+

+ x = self.avgpool(x)

+ x = x.view(x.size(0), -1)

+ x = self.fc(x)

+

+ return x
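`get_preds_fromhm` in utils.py hard-codes a 64x64 heatmap through its `pX < 63` bounds check. That size follows from FAN's stem: a stride-2 7x7 convolution followed by a 2x2 average pool. `ConvBlock`s and `HourGlass` modules preserve the resolution.

The sketch below traces the sizes, assuming the 256x256 crop that `crop()` produces by default. `conv_out`, `fan_heatmap_size`, `hourglass_bottom` and `convblock_channels` are hypothetical helpers.

```python
def conv_out(size, kernel, stride, padding):
    # Standard output-size formula for Conv2d / pooling without dilation
    return (size + 2 * padding - kernel) // stride + 1


def fan_heatmap_size(input_size=256):
    """Trace the spatial size through FAN's stem."""
    size = conv_out(input_size, kernel=7, stride=2, padding=3)  # conv1
    size = conv_out(size, kernel=2, stride=2, padding=0)        # avg_pool2d after conv2
    return size


def hourglass_bottom(size, depth=4):
    # Each HourGlass level halves the size with avg_pool2d, then upsamples by 2
    for _ in range(depth):
        size //= 2
    return size


def convblock_channels(out_planes):
    # ConvBlock concatenates branches of out/2, out/4 and out/4 channels
    return int(out_planes / 2) + int(out_planes / 4) + int(out_planes / 4)


print(fan_heatmap_size(256))    # 64
print(hourglass_bottom(64))     # 4
print(convblock_channels(256))  # 256
```

The residual addition in `ConvBlock` and the `up1 + up2` sum in `HourGlass` only work because these sizes line up. For that reason, `ConvBlock` channel counts should be divisible by 4, and heatmap sizes should be divisible by `2**depth`.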

diff --git a/FaceLandmarkDetection/face_alignment/utils.py b/FaceLandmarkDetection/face_alignment/utils.py

new file mode 100644

index 0000000000000000000000000000000000000000..619570d580d65c84ba60ee043ad381a850f1ab26

--- /dev/null

+++ b/FaceLandmarkDetection/face_alignment/utils.py

@@ -0,0 +1,274 @@

+from __future__ import print_function

+import os

+import sys

+import time

+import torch

+import math

+import numpy as np

+import cv2

+

+

+def _gaussian(

+ size=3, sigma=0.25, amplitude=1, normalize=False, width=None,

+ height=None, sigma_horz=None, sigma_vert=None, mean_horz=0.5,

+ mean_vert=0.5):

+ # handle some defaults

+ if width is None:

+ width = size

+ if height is None:

+ height = size

+ if sigma_horz is None:

+ sigma_horz = sigma

+ if sigma_vert is None:

+ sigma_vert = sigma

+ center_x = mean_horz * width + 0.5

+ center_y = mean_vert * height + 0.5

+ gauss = np.empty((height, width), dtype=np.float32)

+ # generate kernel

+ for i in range(height):

+ for j in range(width):

+ gauss[i][j] = amplitude * math.exp(-(math.pow((j + 1 - center_x) / (

+ sigma_horz * width), 2) / 2.0 + math.pow((i + 1 - center_y) / (sigma_vert * height), 2) / 2.0))

+ if normalize:

+ gauss = gauss / np.sum(gauss)

+ return gauss

+

+

+def draw_gaussian(image, point, sigma):

+ # Check if the gaussian is inside

+ ul = [math.floor(point[0] - 3 * sigma), math.floor(point[1] - 3 * sigma)]

+ br = [math.floor(point[0] + 3 * sigma), math.floor(point[1] + 3 * sigma)]

+ if (ul[0] > image.shape[1] or ul[1] > image.shape[0] or br[0] < 1 or br[1] < 1):

+ return image

+ size = 6 * sigma + 1

+ g = _gaussian(size)

+ g_x = [int(max(1, -ul[0])), int(min(br[0], image.shape[1])) - int(max(1, ul[0])) + int(max(1, -ul[0]))]

+ g_y = [int(max(1, -ul[1])), int(min(br[1], image.shape[0])) - int(max(1, ul[1])) + int(max(1, -ul[1]))]

+ img_x = [int(max(1, ul[0])), int(min(br[0], image.shape[1]))]

+ img_y = [int(max(1, ul[1])), int(min(br[1], image.shape[0]))]

+ assert (g_x[0] > 0 and g_y[1] > 0)

+ image[img_y[0] - 1:img_y[1], img_x[0] - 1:img_x[1]

+ ] = image[img_y[0] - 1:img_y[1], img_x[0] - 1:img_x[1]] + g[g_y[0] - 1:g_y[1], g_x[0] - 1:g_x[1]]

+ image[image > 1] = 1

+ return image

+

+

+def transform(point, center, scale, resolution, invert=False):

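+ """Apply an affine transformation (or its inverse) to a 2D point.

+

+ Given a point, a center, a scale and a target resolution, the function builds the

+ affine matrix mapping a square of side ``200 * scale`` centred on ``center`` onto ``resolution`` pixels and applies it. If invert is ``True``

+ it applies the inverse transformation instead. The result is truncated to integers.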

+

+ Arguments:

+ point {torch.tensor} -- the input 2D point

+ center {torch.tensor or numpy.array} -- the center around which to perform the transformations

+ scale {float} -- the scale of the face/object

+ resolution {float} -- the output resolution

+

+ Keyword Arguments:

+ invert {bool} -- whether the function should apply the direct or the

+ inverse transformation (default: {False})

+ """

+ _pt = torch.ones(3)

+ _pt[0] = point[0]

+ _pt[1] = point[1]

+

+ h = 200.0 * scale

+ t = torch.eye(3)

+ t[0, 0] = resolution / h

+ t[1, 1] = resolution / h

+ t[0, 2] = resolution * (-center[0] / h + 0.5)

+ t[1, 2] = resolution * (-center[1] / h + 0.5)

+

+ if invert:

+ t = torch.inverse(t)

+

+ new_point = (torch.matmul(t, _pt))[0:2]

+

+ return new_point.int()

+

+

+def crop(image, center, scale, resolution=256.0):

+ """Center crops an image or set of heatmaps

+

+ Arguments:

+ image {numpy.array} -- an rgb image

+ center {numpy.array} -- the center of the object, usually the same as of the bounding box

+ scale {float} -- scale of the face

+

+ Keyword Arguments:

+ resolution {float} -- the size of the output cropped image (default: {256.0})

+

+ Returns:

+ numpy.array -- the cropped region, resized to ``resolution`` x ``resolution`` pixels

+ """

+ # Crop around the center point. The input is expected to be an np.ndarray.

+ ul = transform([1, 1], center, scale, resolution, True)

+ br = transform([resolution, resolution], center, scale, resolution, True)

+ # pad = math.ceil(torch.norm((ul - br).float()) / 2.0 - (br[0] - ul[0]) / 2.0)

+ if image.ndim > 2:

+ newDim = np.array([br[1] - ul[1], br[0] - ul[0],

+ image.shape[2]], dtype=np.int32)

+ newImg = np.zeros(newDim, dtype=np.uint8)

+ else:

+ newDim = np.array([br[1] - ul[1], br[0] - ul[0]], dtype=np.int32)

+ newImg = np.zeros(newDim, dtype=np.uint8)

+ ht = image.shape[0]

+ wd = image.shape[1]

+ newX = np.array(

+ [max(1, -ul[0] + 1), min(br[0], wd) - ul[0]], dtype=np.int32)

+ newY = np.array(

+ [max(1, -ul[1] + 1), min(br[1], ht) - ul[1]], dtype=np.int32)

+ oldX = np.array([max(1, ul[0] + 1), min(br[0], wd)], dtype=np.int32)

+ oldY = np.array([max(1, ul[1] + 1), min(br[1], ht)], dtype=np.int32)

+ newImg[newY[0] - 1:newY[1], newX[0] - 1:newX[1]

+ ] = image[oldY[0] - 1:oldY[1], oldX[0] - 1:oldX[1]]

+ newImg = cv2.resize(newImg, dsize=(int(resolution), int(resolution)),

+ interpolation=cv2.INTER_LINEAR)

+ return newImg

+

+

+def get_preds_fromhm(hm, center=None, scale=None):

+ """Obtain (x,y) coordinates given a set of N heatmaps. If the center

+ and the scale are provided, the function also returns the points in

+ the original coordinate frame.

+

+ Arguments:

+ hm {torch.tensor} -- the predicted heatmaps, of shape [B, N, H, W] (the quarter-pixel refinement assumes 64x64 maps)

+

+ Keyword Arguments:

+ center {torch.tensor} -- the center of the bounding box (default: {None})

+ scale {float} -- face scale (default: {None})

+ """

+ max, idx = torch.max(

+ hm.view(hm.size(0), hm.size(1), hm.size(2) * hm.size(3)), 2)

+ idx += 1

+ preds = idx.view(idx.size(0), idx.size(1), 1).repeat(1, 1, 2).float()

+ preds[..., 0].apply_(lambda x: (x - 1) % hm.size(3) + 1)

+ preds[..., 1].add_(-1).div_(hm.size(2)).floor_().add_(1)

+

+ for i in range(preds.size(0)):

+ for j in range(preds.size(1)):

+ hm_ = hm[i, j, :]

+ pX, pY = int(preds[i, j, 0]) - 1, int(preds[i, j, 1]) - 1

+ if pX > 0 and pX < 63 and pY > 0 and pY < 63:

+ diff = torch.FloatTensor(

+ [hm_[pY, pX + 1] - hm_[pY, pX - 1],

+ hm_[pY + 1, pX] - hm_[pY - 1, pX]])

+ preds[i, j].add_(diff.sign_().mul_(.25))

+

+ preds.add_(-.5)

+

+ preds_orig = torch.zeros(preds.size())

+ if center is not None and scale is not None:

+ for i in range(hm.size(0)):

+ for j in range(hm.size(1)):

+ preds_orig[i, j] = transform(

+ preds[i, j], center, scale, hm.size(2), True)

+

+ return preds, preds_orig

+

+

+def shuffle_lr(parts, pairs=None):

+    """Shuffle the points left-right according to the axis of symmetry

+    of the object.

+

+    Arguments:

+        parts {torch.tensor} -- a 3D or 4D object containing the

+        heatmaps.

+

+    Keyword Arguments:

+        pairs {list of integers} -- [index of the mirrored counterpart of each

+        point; the default is the 68-point mapping] (default: {None})

+    """

+    if pairs is None:

+        pairs = [16, 15, 14, 13, 12, 11, 10, 9, 8, 7, 6, 5, 4, 3, 2, 1, 0,

+                 26, 25, 24, 23, 22, 21, 20, 19, 18, 17, 27, 28, 29, 30, 35,

+                 34, 33, 32, 31, 45, 44, 43, 42, 47, 46, 39, 38, 37, 36, 41,

+                 40, 54, 53, 52, 51, 50, 49, 48, 59, 58, 57, 56, 55, 64, 63,

+                 62, 61, 60, 67, 66, 65]

+    if parts.ndimension() == 3:

+        parts = parts[pairs, ...]

+    else:

+        parts = parts[:, pairs, ...]

+

+    return parts

+

+

+def flip(tensor, is_label=False):

+    """Flip an image or a set of heatmaps left-right. The last dimension is

+    treated as the width, so images must be channel-first ([C, H, W]).

+

+    Arguments:

+        tensor {numpy.array or torch.tensor} -- [the input image or heatmaps]

+

+    Keyword Arguments:

+        is_label {bool} -- [whether the input is a set of heatmaps (True)

+        or an image (False)] (default: {False})

+    """

+    if not torch.is_tensor(tensor):

+        tensor = torch.from_numpy(tensor)

+

+    if is_label:

+        tensor = shuffle_lr(tensor).flip(tensor.ndimension() - 1)

+    else:

+        tensor = tensor.flip(tensor.ndimension() - 1)

+

+    return tensor
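With `is_label=True`, `flip` swaps the left/right heatmap channels through `shuffle_lr` and then mirrors the width axis, so heatmaps predicted for a mirrored face can be mapped back onto the original one. A NumPy sketch of that mapping plus a flip-averaging step, assuming (N, H, W) heatmaps and a hypothetical `predict` callable standing in for the network. Averaging the two predictions is one common form of flip test-time augmentation and is presumably what the `flip_input` option of `FaceAlignment` enables:

```python
import numpy as np

# Default left/right mapping copied from shuffle_lr (68-point layout).
PAIRS = [16, 15, 14, 13, 12, 11, 10, 9, 8, 7, 6, 5, 4, 3, 2, 1, 0,
         26, 25, 24, 23, 22, 21, 20, 19, 18, 17, 27, 28, 29, 30, 35,
         34, 33, 32, 31, 45, 44, 43, 42, 47, 46, 39, 38, 37, 36, 41,
         40, 54, 53, 52, 51, 50, 49, 48, 59, 58, 57, 56, 55, 64, 63,
         62, 61, 60, 67, 66, 65]


def flip_heatmaps(hm):
    """NumPy analogue of flip(hm, is_label=True) for an (N, H, W) array:
    reorder channels with PAIRS, then mirror the width axis."""
    return hm[PAIRS][:, :, ::-1]


def predict_with_flip(image, predict):
    """Average the heatmaps of `image` and of its mirror image.

    `predict` is a hypothetical callable mapping an (H, W) image to
    (68, H, W) heatmaps.
    """
    direct = predict(image)
    mirrored = flip_heatmaps(predict(image[:, ::-1]))
    return (direct + mirrored) / 2.0


hm = np.random.default_rng(0).random((68, 8, 8))
restored = flip_heatmaps(flip_heatmaps(hm))
print(np.array_equal(restored, hm))  # -> True
```

The round trip works because the default `pairs` list is its own inverse (0↔16, 36↔45, 48↔54, and so on, with the nose-bridge points mapped to themselves).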

+

+# From pyzolib/paths.py (https://bitbucket.org/pyzo/pyzolib/src/tip/paths.py)

+

+

+def appdata_dir(appname=None, roaming=False):

+    """ appdata_dir(appname=None, roaming=False)

+

+    Get the path to the application directory, where applications are allowed

+    to write user specific files (e.g. configurations). For non-user specific

+    data, consider using common_appdata_dir().

+    If appname is given, a subdir is appended (and created if necessary).

+    If roaming is True, will prefer a roaming directory (Windows Vista/7).

+    """

+

+    # Define default user directory

+    userDir = os.getenv('FACEALIGNMENT_USERDIR', None)

+    if userDir is None:

+        userDir = os.path.expanduser('~')

+        if not os.path.isdir(userDir):  # pragma: no cover

+            userDir = '/var/tmp'  # issue #54

+

+    # Get system app data dir

+    path = None

+    if sys.platform.startswith('win'):

+        path1, path2 = os.getenv('LOCALAPPDATA'), os.getenv('APPDATA')

+        path = (path2 or path1) if roaming else (path1 or path2)

+    elif sys.platform.startswith('darwin'):

+        path = os.path.join(userDir, 'Library', 'Application Support')

+    # On Linux and as fallback

+    if not (path and os.path.isdir(path)):

+        path = userDir

+

+    # Maybe we should store things local to the executable (in case of a

+    # portable distro or a frozen application that wants to be portable)

+    prefix = sys.prefix

+    if getattr(sys, 'frozen', None):

+        prefix = os.path.abspath(os.path.dirname(sys.executable))

+    for reldir in ('settings', '../settings'):

+        localpath = os.path.abspath(os.path.join(prefix, reldir))

+        if os.path.isdir(localpath):  # pragma: no cover

+            try:

+                open(os.path.join(localpath, 'test.write'), 'wb').close()

+                os.remove(os.path.join(localpath, 'test.write'))

+            except IOError:

+                pass  # We cannot write in this directory

+            else:

+                path = localpath

+                break

+

+    # Get path specific for this app

+    if appname:

+        if path == userDir:

+            appname = '.' + appname.lstrip('.')  # Make it a hidden directory

+        path = os.path.join(path, appname)

+        if not os.path.isdir(path):  # pragma: no cover

+            os.mkdir(path)

+

+    # Done

+    return path
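In practice, the directory returned by `appdata_dir` can be redirected with the `FACEALIGNMENT_USERDIR` environment variable, and on Linux the per-app folder created directly under the home directory is hidden. A minimal sketch of just those two rules, leaving out the Windows, macOS and frozen-application branches. Both helper names are hypothetical, and `environ` is a parameter only so the sketch is easy to test:

```python
import os


def default_user_dir(environ=os.environ):
    """First step of appdata_dir: FACEALIGNMENT_USERDIR wins, then the
    home directory, then /var/tmp if the home directory does not exist."""
    user_dir = environ.get('FACEALIGNMENT_USERDIR')
    if user_dir is None:
        user_dir = os.path.expanduser('~')
        if not os.path.isdir(user_dir):
            user_dir = '/var/tmp'
    return user_dir


def app_dir_name(appname, under_home):
    """Folders created directly in the user directory get a leading dot."""
    return '.' + appname.lstrip('.') if under_home else appname


print(default_user_dir({'FACEALIGNMENT_USERDIR': '/data/fa'}))  # -> /data/fa
print(app_dir_name('face_alignment', under_home=True))  # -> .face_alignment
```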

diff --git a/FaceLandmarkDetection/get_face_landmark.py b/FaceLandmarkDetection/get_face_landmark.py

new file mode 100644

index 0000000000000000000000000000000000000000..1ee59679395824add92f2539a5a2bec3443a0ec9

--- /dev/null

+++ b/FaceLandmarkDetection/get_face_landmark.py

@@ -0,0 +1,46 @@

+#!/usr/bin/python

+# -*- coding: utf-8 -*-

+import os

+

+import numpy as np

+from skimage import io

+

+import face_alignment

+

+# 2D (68-point) landmark detector on the first GPU;

+# pass device='cpu' instead if no CUDA device is available.

+fa = face_alignment.FaceAlignment(face_alignment.LandmarksType._2D,

+                                  device='cuda:0', flip_input=False)

+

+

+FilePath = '../TestData/RealVgg/Imgs'

+SavePath = '../TestData/RealVgg/Imgs/Landmarks'

+if not os.path.exists(SavePath):

+    os.mkdir(SavePath)

+

+# SavePath is a sub-directory of FilePath, so list regular files only.

+ImgNames = sorted(n for n in os.listdir(FilePath)

+                  if os.path.isfile(os.path.join(FilePath, n)))

+

+for i, name in enumerate(ImgNames):

+    print((i, name))

+    img = io.imread(os.path.join(FilePath, name))

+

+    try:

+        PredsAll = fa.get_landmarks(img)

+    except Exception:  # treat detector errors as "no face"

+        print('#########No face')

+        continue

+    if PredsAll is None:

+        print('#########No face2')

+        continue

+    if len(PredsAll) != 1:

+        print('#########too many faces')

+        continue

+    preds = PredsAll[0]

+    # Nose length (landmark 27 to 33); computed but currently unused.

+    AddLength = np.sqrt(np.sum(np.power(preds[27][0:2] - preds[33][0:2], 2)))

+    SaveName = name + '.txt'

+

+    # One "x y" row per landmark.

+    np.savetxt(os.path.join(SavePath, SaveName), preds[:, 0:2], fmt='%.3f')
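Each output file therefore holds one `x y` row per landmark, written with three decimals, and the unused `AddLength` is the Euclidean distance between landmarks 27 (top of the nose bridge) and 33 (centre of the lower nose). A sketch that writes a synthetic landmark array the same way, reads it back with `np.loadtxt` and recomputes that distance; the coordinates and the file name are made up for illustration:

```python
import os
import tempfile

import numpy as np

# Synthetic stand-in for `preds`: 68 (x, y) points, not a real detection.
preds = np.zeros((68, 2))
preds[27] = (100.0, 80.0)  # top of the nose bridge
preds[33] = (103.0, 84.0)  # centre of the lower nose

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, 'example.jpg.txt')
    np.savetxt(path, preds[:, 0:2], fmt='%.3f')  # same call as the script
    loaded = np.loadtxt(path)  # one row per landmark

# Equivalent to the script's np.sqrt(np.sum(np.power(..., 2))).
nose_length = np.linalg.norm(loaded[27] - loaded[33])
print(loaded.shape, nose_length)  # -> (68, 2) 5.0
```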

diff --git a/FaceLandmarkDetection/setup.cfg b/FaceLandmarkDetection/setup.cfg

new file mode 100644

index 0000000000000000000000000000000000000000..1d5d127316734bcda878fffbbc24c56404911739

--- /dev/null

+++ b/FaceLandmarkDetection/setup.cfg

@@ -0,0 +1,32 @@

+[bumpversion]

+current_version = 1.0.1

+commit = True

+tag = True

+

+[bumpversion:file:setup.py]

+search = version='{current_version}'

+replace = version='{new_version}'

+

+[bumpversion:file:face_alignment/__init__.py]

+search = __version__ = '{current_version}'

+replace = __version__ = '{new_version}'

+

+[metadata]

+description-file = README.md

+

+[bdist_wheel]

+universal = 1

+

+[flake8]

+exclude =

+ .github,

+ examples,

+ docs,

+ .tox,

+ bin,

+ dist,

+ tools,

+ *.egg-info,

+ __init__.py,

+ *.yml