| title: README | |

| emoji: 📉 | |

| colorFrom: green | |

| colorTo: purple | |

| sdk: static | |

| pinned: false | |

| tag: python, c++, x86, deep learning, Neural networks, Edge AI, DL-inference | |

| # OpenVINO Toolkit | |

| ### Make AI inference faster and easier to deploy! | |

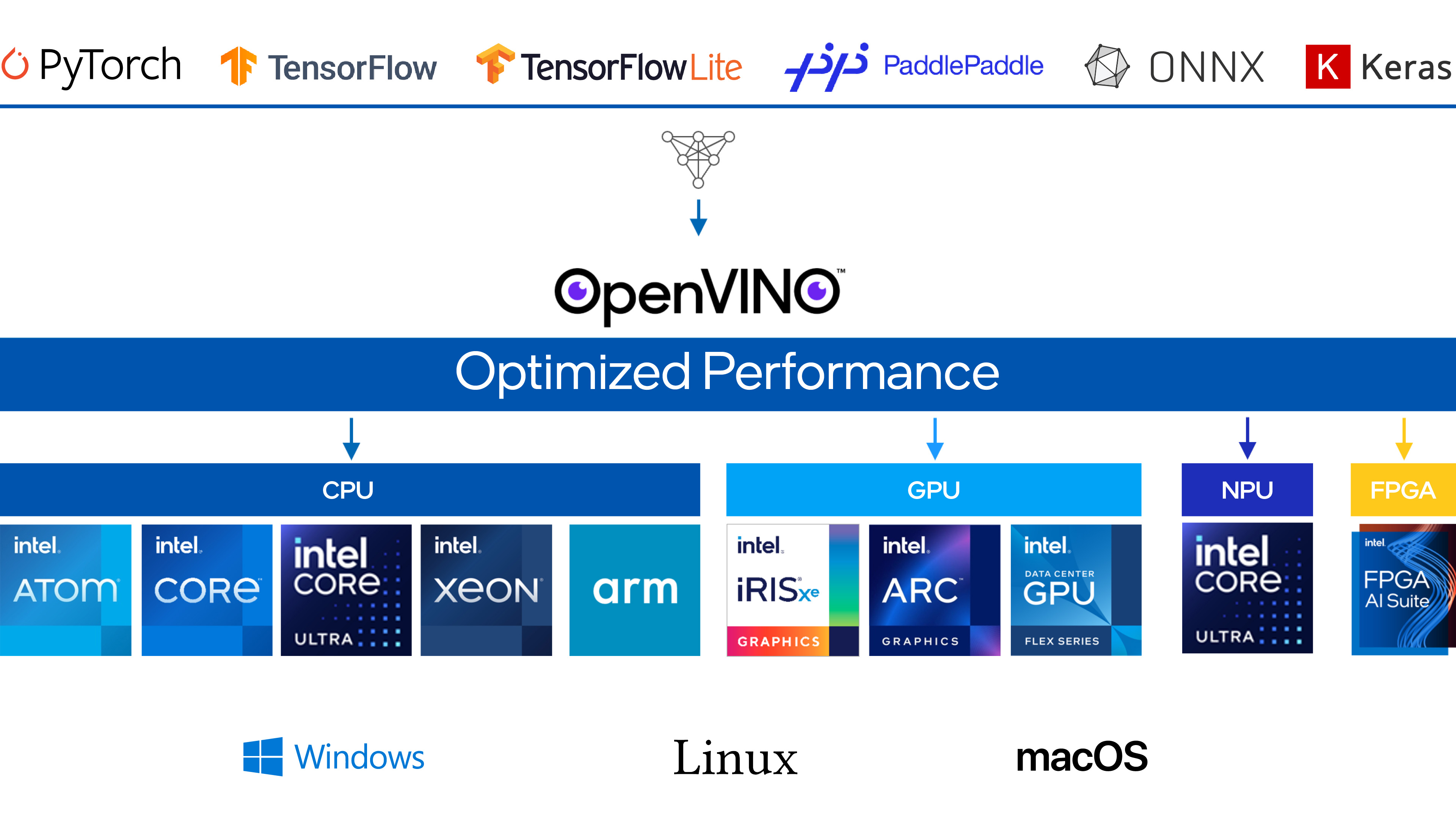

| Learning and practicing AI is not easy, and deploying AI in real applications is harder still. We recognized that and created | |

| OpenVINO, an open-source toolkit for bringing AI models to life on the most widespread and available platforms, such as x86 CPUs | |

| and integrated Intel GPUs. You can use OpenVINO to optimize your own model for high performance with its model optimization | |

| capabilities and run it through the OpenVINO Runtime on various devices. We are driven by the idea of making it easy for anyone | |

| to learn and use AI with minimal investment, and of unlocking AI-driven innovation for a wide audience. Besides enabling AI inference | |

| on the most widespread and popular platforms, we also provide pre-trained models, educational and visual demos, image annotation | |

| tools, and model training templates to get you up to speed. Along with good use-case coverage and a simple, educational API, OpenVINO | |

| is also the toolkit of choice for AI in production at many companies: it is a fully production-ready software solution. | |
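To make "run it through the OpenVINO Runtime on various devices" concrete, here is a minimal sketch of reading a model, choosing a device, and running one inference. It assumes a recent `openvino` Python package (2023.1 or later, where `import openvino as ov` is available). The helper names `pick_device` and `run_once`, the GPU-then-CPU preference order, and the model path are illustrative assumptions, not part of OpenVINO.

```python
from typing import Sequence


def pick_device(available: Sequence[str],
                preferred: Sequence[str] = ("GPU", "CPU")) -> str:
    """Hypothetical helper: return the first preferred device that is present.

    Matches by prefix so that a name such as "GPU.0" counts as "GPU".
    """
    for want in preferred:
        for name in available:
            if name == want or name.startswith(want + "."):
                return name
    raise RuntimeError(f"none of {list(preferred)} found in {list(available)}")


def run_once(model_path: str, input_array):
    """Hypothetical helper: read, compile, and run a model once.

    Requires the `openvino` package; it is imported lazily so that
    pick_device can be used and tested without it.
    """
    import openvino as ov

    core = ov.Core()
    model = core.read_model(model_path)            # e.g. an IR .xml or an ONNX file
    device = pick_device(core.available_devices)   # device names reported by the runtime
    compiled = core.compile_model(model, device)
    results = compiled(input_array)                # synchronous inference
    return results[compiled.output(0)]             # first output of the model
```

A call might look like `run_once("model.xml", batch)`, where `model.xml` is a placeholder path and `batch` is a NumPy array shaped to match the model's input.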